|

|

|---|

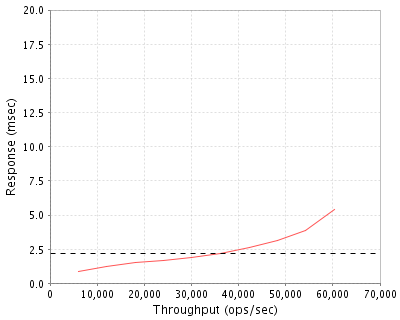

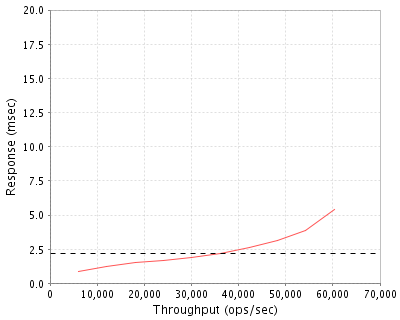

| NetApp, Inc. | : | FAS3160 (SATA Disks with Performance Acceleration Module) |
|---|---|---|
| SPECsfs2008_nfs.v3 | = | 60389 Ops/Sec (Overall Response Time = 2.18 msec) |


| Tested By | NetApp, Inc. |
|---|---|
| Product Name | FAS3160 (SATA Disks with Performance Acceleration Module) |
| Hardware Available | September 2009 |
| Software Available | August 2009 |
| Date Tested | July 2009 |
| SFS License Number | 33 |
| Licensee Locations | Sunnyvale, CA USA |

The NetApp® FAS3100 series is the midrange of the FAS family of storage systems built on our Unified Storage Architecture, which is flexible enough to handle primary and/or secondary storage needs for NAS and/or SAN implementations. The FAS3100 series features three models: the FAS3140, FAS3160, and FAS3170. Performance is driven by a 64-bit processing design that uses high-throughput, low-latency links and PCI Express for all internal and external data transfers. All FAS3100 systems scale to as many as 36 Ethernet ports or 40 Fibre Channel ports, including support for both 8Gb Fibre Channel and 10Gb Ethernet. These systems are housed in an efficient, HA-ready 6U form factor in which dual controllers share a common chassis, backplane, and power resources. The FAS3160, which was used in these benchmark tests, scales to a maximum of 672 disk drives and 672TB of capacity.

The NetApp Performance Acceleration Module (PAM) family provides a way to optimize performance through read caching. Performance Acceleration Modules are optimized to improve the performance of random-read-intensive workloads such as file services. These PCI Express cards combine hardware and tunable caching software to reduce latency and improve I/O throughput without adding more disk drives. When multiple modules are configured in a single controller, they function as a unified read cache. The FAS3160 system can be configured with up to two 256GB PAM II modules, giving a unified 512GB read cache in each of its two controllers.

| Item No | Qty | Type | Vendor | Model/Name | Description |
|---|---|---|---|---|---|
| 1 | 2 | Storage Controller | NetApp | FAS3160A-IB-BASE-R5 | FAS3160A,IB,ACT-ACT,OS,R5 |
| 2 | 1 | Controller Chassis | NetApp | FAS3160A-CHASSIS-R5-C | FAS3160,ACT-ACT,Chassis,AC PS,-C,R5 |
| 3 | 4 | Disk Drives w/Shelf | NetApp | DS4243-0724-24A-R5-C | DSK SHLF,24x1.0TB,7.2K,SATA,IOM3,-C,R5 |
| 4 | 2 | SAS Host Bus Adapter | NetApp | X2065A-R6 | HBA,SAS,4-Port,3Gb,PCIe,QSFP,R6 |
| 5 | 2 | Gigabit Ethernet Adapter | NetApp | X1039A-R6 | NIC,2-port,GbE,Copper,PCIe,R6 |
| 6 | 2 | PAM Adapter | NetApp | X1937A-R5-C | ADPT,PAM II,PCIe,256GB-C,R5 |
| 7 | 2 | Software License | NetApp | SW-T4C-NFS | NFS Software,T4C |
| 8 | 2 | Software License | NetApp | SW-T4C-PAMII-C | PAM II SW,T4C |

| OS Name and Version | Data ONTAP 7.3.2 |
|---|---|
| Other Software | None |
| Filesystem Software | Data ONTAP 7.3.2 |

| Name | Value | Description |
|---|---|---|
| vol options 'volume' no_atime_update | on | Disable atime updates (applied to all volumes) |

Server tuning notes: N/A
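The tuning option is set per volume from the Data ONTAP 7-mode console in the form `vol options <volname> no_atime_update on`. The sketch below only builds those command strings for the eight benchmark volumes, whose names (`vol1` through `vol8`) come from the load-generator table in this report. The report does not name the root volume or say how the commands were issued, so neither appears here. The helper `atime_commands` is hypothetical.

```python
# Sketch: build the Data ONTAP 7-mode command for the single tuning option in
# the table above. Volume names come from this report's load-generator table;
# the root volume's name is not given, so it is not included (assumption).
VOLUMES = [f"vol{i}" for i in range(1, 9)]  # vol1 .. vol8


def atime_commands(volumes: list[str]) -> list[str]:
    """Return one 'vol options' command per volume that disables atime updates."""
    return [f"vol options {vol} no_atime_update on" for vol in volumes]


if __name__ == "__main__":
    for cmd in atime_commands(VOLUMES):
        print(cmd)  # first line: vol options vol1 no_atime_update on
```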

| Description | Number of Disks | Usable Size |
|---|---|---|
| 1TB SATA 7200 RPM Disk Drives | 96 | 56.6 TB |
| Total | 96 | 56.6 TB |

| Number of Filesystems | 8 |
|---|---|
| Total Exported Capacity | 55.95 TB |
| Filesystem Type | WAFL |
| Filesystem Creation Options | Default |
| Filesystem Config | Each filesystem was striped across 12 disks |
| Fileset Size | 7031.1 GB |

The storage configuration consisted of 4 shelves, each with 24 disks. All four shelves were daisy-chained so that the outputs of each shelf were attached to the inputs of the next shelf in the chain. The first shelf had two 3Gbit/s SAS input connections, each connected to a SAS host bus adapter port on a different storage controller. The two outputs of the fourth shelf were routed back to SAS host bus adapter ports, one on each storage controller.

Each storage controller was the primary owner of 48 disks (half of the 96 in the system), which formed four disk pools, or "aggregates". Each aggregate was composed of a single RAID-DP group of 10 data disks and 2 parity disks. Within each aggregate, a flexible volume (utilizing Data ONTAP FlexVol (TM) technology) was created to hold one SFS filesystem. Each volume was striped across all disks in the aggregate where it resided. Each controller was the owner of four volumes/filesystems, but the disks in each aggregate were dual-attached so that, in the event of a fault, they could be managed by the other controller via an alternate path. A separate flexible volume residing in one of the aggregates owned by each controller held the Data ONTAP operating system and system files.
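As a cross-check of the layout, the counts reported in this disclosure can be reconciled with a few lines of arithmetic: 4 shelves of 24 disks, 8 filesystems each striped across 12 disks, and 10 data plus 2 parity disks per RAID-DP group. This sketch uses only those figures. The value of four aggregates per controller is derived from them (8 filesystems, one aggregate each, split across 2 controllers) and is not a separately reported number.

```python
# Disk accounting for the storage layout described above. Shelf, disk, and
# RAID-group counts are taken from this report; aggregates per controller is
# derived (8 filesystems, one 12-disk aggregate each, over 2 controllers).
SHELVES, DISKS_PER_SHELF = 4, 24
CONTROLLERS = 2
FILESYSTEMS = 8                   # one aggregate (and one volume) each
DATA_DISKS, PARITY_DISKS = 10, 2  # single RAID-DP group per aggregate

total_disks = SHELVES * DISKS_PER_SHELF
disks_per_aggregate = DATA_DISKS + PARITY_DISKS
aggregates_per_controller = FILESYSTEMS // CONTROLLERS

# The 8 aggregates account for all 96 disks listed in the disk table.
assert FILESYSTEMS * disks_per_aggregate == total_disks == 96

print("disks per controller:", total_disks // CONTROLLERS)           # 48
print("aggregates per controller:", aggregates_per_controller)       # 4
print("data disks:", FILESYSTEMS * DATA_DISKS)                       # 80
print("parity disks:", FILESYSTEMS * PARITY_DISKS)                   # 16
print(f"parity overhead: {PARITY_DISKS / disks_per_aggregate:.1%}")  # 16.7%
```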

| Item No | Network Type | Number of Ports Used | Notes |
|---|---|---|---|
| 1 | Jumbo Frame Gigabit Ethernet | 4 | Dual-port gigabit ethernet PCI-e adapter |

There were two gigabit ethernet network interfaces on each storage controller. The interfaces were configured to use jumbo frames (MTU size of 9000 bytes). All network interfaces were connected to a Cisco 6509 switch, which provided connectivity to the clients.

An MTU size of 9000 was set for all connections to the switch. Each load generator was connected to the network via a single 1 GigE port, which was configured with 4 separate IP addresses on separate subnets.
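The report states only that each client's single port carried 4 IP addresses on separate subnets. One plausible reading is that this lets every client reach each of the 4 storage-side ports on that port's own subnet. The sketch below builds such an address plan with Python's standard `ipaddress` module. The subnets, the host numbering, and the one-subnet-per-storage-port mapping are all hypothetical, and the helper names are illustrative only.

```python
import ipaddress

# Hypothetical addressing plan. The report says only that each load generator
# had 4 IP addresses on separate subnets; the subnets, host numbers, and the
# one-subnet-per-storage-port mapping below are assumptions for illustration.
SUBNETS = [ipaddress.ip_network(f"192.168.{n}.0/24") for n in range(1, 5)]
NUM_CLIENTS = 10  # load generators in this test


def storage_port_address(subnet_index: int) -> ipaddress.IPv4Address:
    """Assume storage port N (0-based) answers at host .1 on subnet N."""
    return SUBNETS[subnet_index][1]


def client_addresses(client_number: int) -> list[ipaddress.IPv4Address]:
    """Give client N (1-based) host number 10 + N on every subnet."""
    return [subnet[10 + client_number] for subnet in SUBNETS]


plan = {f"lg{n}": client_addresses(n) for n in range(1, NUM_CLIENTS + 1)}
for name, addrs in plan.items():
    print(name, ", ".join(str(a) for a in addrs))
```

Giving each client the same host number on every subnet is only a readability choice. Any scheme works as long as all addresses on one subnet are unique and the 9000-byte MTU matches end to end.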

| Item No | Qty | Type | Description | Processing Function |
|---|---|---|---|---|
| 1 | 4 | CPU | 2.6GHz Dual-Core AMD Opteron(tm) Processor 2218, 2MB L2 cache | Networking, NFS protocol, WAFL filesystem, RAID/Storage drivers |

Each storage controller has two physical processors, each with two processing cores.

| Description | Size in GB | Number of Instances | Total GB | Nonvolatile |
|---|---|---|---|---|
| Storage controller mainboard memory | 8 | 2 | 16 | V |
| Performance Acceleration Module memory | 256 | 2 | 512 | V |
| Storage controller integrated NVRAM module | 2 | 2 | 4 | NV |
| Grand Total Memory Gigabytes | | | 532 | |

Each storage controller has main memory that is used for the operating system and for caching filesystem data. A separate, integrated battery-backed RAM module is used to provide stable storage for writes that have not yet been written to disk.
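The Grand Total row can be re-derived from the individual rows of the memory table. This sketch also splits the total into volatile and nonvolatile memory and gives a per-controller figure. The per-controller split assumes one mainboard memory, one PAM II module, and one NVRAM module per controller, which matches the configuration notes.

```python
# Re-derive the memory table totals. Rows are copied from the table above:
# (description, size_gb, instances, nonvolatile).
MEMORY = [
    ("Storage controller mainboard memory", 8, 2, False),
    ("Performance Acceleration Module memory", 256, 2, False),
    ("Storage controller integrated NVRAM module", 2, 2, True),
]
CONTROLLERS = 2  # assumed: one instance of each row per controller

volatile_gb = sum(size * n for _, size, n, nv in MEMORY if not nv)
nonvolatile_gb = sum(size * n for _, size, n, nv in MEMORY if nv)
per_controller_gb = sum(size * n for _, size, n, _ in MEMORY) // CONTROLLERS

print(volatile_gb, nonvolatile_gb, volatile_gb + nonvolatile_gb)  # 528 4 532
print(per_controller_gb)                                          # 266
```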

The WAFL filesystem logs writes and other filesystem-modifying transactions to the integrated NVRAM module. In an active-active configuration, as in the system under test, such transactions are also logged to the NVRAM on the partner storage controller so that, in the event of a storage controller failure, any transactions on the failed controller can be completed by the partner controller. Filesystem-modifying NFS operations are not acknowledged until after the storage system has confirmed that the related data are stored in the NVRAM modules of both storage controllers (when both controllers are active). The battery backing the NVRAM ensures that any uncommitted transactions are preserved for at least 72 hours.
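The acknowledgment rule described above can be illustrated with a toy model. In this model, each controller keeps a log of its own pending operations plus a mirror of its partner's. A write is acknowledged only after it is present in the local log and, while the partner is up, in the partner's mirror as well. This is a simplified sketch, not Data ONTAP's implementation. The class, the functions, and the two-log split are modeling assumptions.

```python
from dataclasses import dataclass, field

# Simplified model of the NVRAM acknowledgment rule described above. It is not
# Data ONTAP code and every name here is hypothetical. Each controller keeps a
# log of its own pending operations plus a mirror of its partner's log.


@dataclass
class Controller:
    name: str
    alive: bool = True
    own_log: list[str] = field(default_factory=list)
    partner_log: list[str] = field(default_factory=list)


def log_write(op: str, local: Controller, partner: Controller) -> bool:
    """Log a filesystem-modifying op; return True once it may be acknowledged."""
    local.own_log.append(op)
    if partner.alive:
        partner.partner_log.append(op)  # mirror into the partner's NVRAM
    # Ack needs the local copy, plus the partner copy while the partner is up.
    return op in local.own_log and (not partner.alive or op in partner.partner_log)


def flush_to_disk(local: Controller, partner: Controller) -> None:
    """Once logged data is written to disk, both log copies can be discarded."""
    local.own_log.clear()
    partner.partner_log.clear()


def takeover(survivor: Controller, failed: Controller) -> list[str]:
    """The survivor completes the failed node's pending ops from its mirror."""
    failed.alive = False
    return list(survivor.partner_log)


a, b = Controller("A"), Controller("B")
assert log_write("A: WRITE fh1", a, b)
print(takeover(b, a))  # ['A: WRITE fh1']
```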

The system under test consisted of two FAS3160 storage controllers housed in a single 6U chassis and 4 SAS-attached storage shelves, each with 24 1TB SATA disk drives. The two controllers were configured in an active-active cluster configuration using the high-availability cluster software option in conjunction with an InfiniBand cluster interconnect on the backplane of the shared chassis. A dual-port gigabit ethernet adapter and a quad-port SAS host bus adapter were present in PCI-e expansion slots on each storage controller. A Performance Acceleration Module was present in a PCI-e expansion slot and enabled with default settings on each storage controller. The storage shelves were connected to each other via two 3Gbit/s SAS connections. The first and last shelves each had one 3Gbit/s SAS connection to each storage controller. The system under test was connected to a gigabit ethernet switch via 4 network ports (two per storage controller).

All standard data protection features, including background RAID and media error scrubbing, software validated RAID checksumming, and double disk failure protection via double parity RAID (RAID-DP) were enabled during the test.

| Item No | Qty | Vendor | Model/Name | Description |
|---|---|---|---|---|
| 1 | 10 | SuperMicro | SuperServer 6014H-i2 | Workstation with 2GB RAM and Linux operating system |
| 2 | 1 | Cisco | 6509 | Cisco Catalyst 6509 Ethernet Switch |

| LG Type Name | LG1 |
|---|---|
| BOM Item # | 1 |
| Processor Name | Intel Xeon |
| Processor Speed | 3.40 GHz |
| Number of Processors (chips) | 2 |
| Number of Cores/Chip | 2 |
| Memory Size | 2 GB |
| Operating System | RHEL4 kernel 2.6.9-55.0.6.ELsmp |
| Network Type | 1 x Intel 82546GB PCI-X GigE |

| Network Attached Storage Type | NFS V3 |
|---|---|
| Number of Load Generators | 10 |
| Number of Processes per LG | 32 |
| Biod Max Read Setting | 8 |
| Biod Max Write Setting | 8 |
| Block Size | AUTO |

| LG No | LG Type | Network | Target Filesystems | Notes |
|---|---|---|---|---|
| 1..10 | LG1 | 1 | /vol/vol1 /vol/vol2 /vol/vol3 /vol/vol4 /vol/vol5 /vol/vol6 /vol/vol7 /vol/vol8 | N/A |

All filesystems were mounted on all clients, which were connected to the same physical and logical network.

Each load-generating client hosted 32 processes. The assignment of processes to filesystems and network interfaces was done such that they were evenly divided across all filesystems and network paths to the storage controllers. The filesystem data was striped evenly across all disks and SAS channels on the storage backend.
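One way to get the even split described above is a simple round-robin. With 10 clients running 32 processes each, 320 processes spread over 8 filesystems gives 40 per filesystem. Routing each filesystem through a port on its owning controller then gives 80 processes per storage port. The process and filesystem counts come from this report. The assignment rule and the ownership split (vol1 to vol4 on one controller, vol5 to vol8 on the other) are hypothetical, because the report does not state either.

```python
from collections import Counter
from itertools import product

# Hypothetical even assignment of benchmark processes. Counts are from this
# report; the ownership split (vol1-vol4 on one controller, vol5-vol8 on the
# other) and the round-robin rule are assumptions for illustration.
NUM_CLIENTS, PROCS_PER_CLIENT = 10, 32
FILESYSTEMS = [f"/vol/vol{i}" for i in range(1, 9)]
FS_PER_CONTROLLER, PORTS_PER_CONTROLLER = 4, 2


def path_for(fs_index: int) -> int:
    """Pick a network port (0-3) on the controller assumed to own the filesystem."""
    controller = fs_index // FS_PER_CONTROLLER
    return controller * PORTS_PER_CONTROLLER + fs_index % PORTS_PER_CONTROLLER


def assign(client: int, proc: int) -> tuple[str, int]:
    """Round-robin a (client, process) pair onto a filesystem and its path."""
    fs_index = (client * PROCS_PER_CLIENT + proc) % len(FILESYSTEMS)
    return FILESYSTEMS[fs_index], path_for(fs_index)


pairs = [assign(c, p) for c, p in product(range(NUM_CLIENTS), range(PROCS_PER_CLIENT))]
per_fs = Counter(fs for fs, _ in pairs)
per_path = Counter(path for _, path in pairs)
print(sorted(set(per_fs.values())))    # [40]
print(sorted(set(per_path.values())))  # [80]
```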

Other test notes: None.

NetApp is a registered trademark and "Data ONTAP", "FlexVol", and "WAFL" are trademarks of NetApp, Inc. in the United States and other countries. All other trademarks belong to their respective owners and should be treated as such.

Generated on Tue Aug 25 09:18:16 2009 by SPECsfs2008 HTML Formatter

Copyright © 1997-2008 Standard Performance Evaluation Corporation

First published at SPEC.org on 25-Aug-2009