SPECsfs2008_nfs.v3 Result

Dell Inc.: Dell Compellent FS8600 v2 - Single Appliance

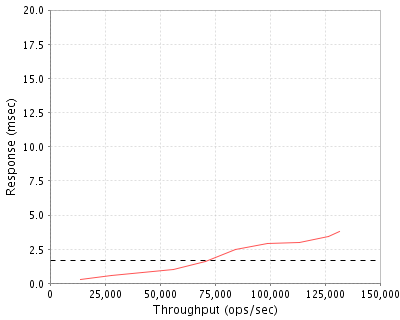

SPECsfs2008_nfs.v3 = 131684 Ops/Sec (Overall Response Time = 1.68 msec)

Performance

| Throughput (ops/sec) | Response (msec) |
|---|---|
| 14011 | 0.3 |
| 28044 | 0.6 |
| 42013 | 0.8 |
| 56173 | 1.0 |
| 70476 | 1.6 |
| 84315 | 2.5 |
| 98730 | 2.9 |
| 113249 | 3.0 |
| 126760 | 3.4 |
| 131684 | 3.8 |
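The overall response time in the headline can be approximately reproduced from the ten measured points. The sketch below assumes the overall response time is the area under the response-time versus throughput curve, integrated with the trapezoidal rule starting from the origin, divided by the peak throughput. The function name and data layout are illustrative only, and because the tabulated response times are rounded to one decimal place, the result is only an approximation of the published figure.

```python
# (throughput in ops/sec, response time in msec) pairs from the Performance table.
points = [
    (14011, 0.3),
    (28044, 0.6),
    (42013, 0.8),
    (56173, 1.0),
    (70476, 1.6),
    (84315, 2.5),
    (98730, 2.9),
    (113249, 3.0),
    (126760, 3.4),
    (131684, 3.8),
]


def overall_response_time(points):
    """Trapezoidal area under the curve (from the origin) divided by peak throughput.

    Assumption: this is how the overall response time is derived; the
    function itself is a hypothetical helper, not part of the benchmark.
    """
    area = 0.0
    prev_x, prev_y = 0.0, 0.0  # the curve is assumed to start at the origin
    for x, y in points:
        area += (x - prev_x) * (y + prev_y) / 2.0
        prev_x, prev_y = x, y
    return area / prev_x  # prev_x now holds the peak throughput


ort = overall_response_time(points)
print(f"{ort:.2f} msec")
```

Under these assumptions the computed value rounds to the 1.68 msec reported above.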

Product and Test Information

| | |
|---|---|
| Tested By | Dell Inc. |
| Product Name | Dell Compellent FS8600 v2 - Single Appliance |
| Hardware Available | July 2013 |
| Software Available | July 2013 |
| Date Tested | May 2013 |
| SFS License Number | 55 |
| Licensee Locations | Round Rock, TX |

Fluid File System (FluidFS) provides scale-out network attached storage (NAS) leveraging Dell's primary storage arrays for backend block storage. FluidFS is a fully virtualized, distributed clustered file system that avoids the architectural limitations of traditional scale-up file systems. It scales performance, capacity and block vs. file needs independently while maintaining industry-leading TCO. High performance is driven by advanced caching algorithms, intelligent front-end and back-end load balancing, an advanced metadata architecture and small and large-file optimizations. The FS8600 is a dual controller appliance used in conjunction with Dell Compellent Storage Center to provide CIFS/NFS access to a single virtualized pool of storage.

Configuration Bill of Materials

| Item No | Qty | Type | Vendor | Model/Name | Description |
|---|---|---|---|---|---|
| 1 | 1 | NAS Appliance | Dell | NAS-FS8600-10GBE | FS8600 10GbE dual controller NAS appliance |
| 2 | 2 | Storage Controller | Dell | CT-SC8000-BASE | SC8000 storage controller |
| 3 | 4 | Disk Enclosure | Dell | EN-SC220-1235 | SC220 2U 24 2.5" drive 6Gb SAS enclosure |
| 4 | 4 | Fibre Channel Card | Dell | IO-F8X2S-E-LP-D | 2-port 8Gb FC low profile PCI-E card |
| 5 | 4 | SAS Card | Dell | IO-SAS6X4S-E2-D | 4-port 6Gb PCI-E SAS card |
| 6 | 6 | Disk | Dell | 400GB SLC SSD | 400GB SLC SSD 2.5" Drive |
| 7 | 30 | Disk | Dell | 1.6TB eMLC SSD | 1.6TB eMLC SSD 2.5" Drive |
| 8 | 1 | Software | Dell | SW-CORE-BASE | Storage Center base software license |
| 9 | 3 | Software | Dell | SW-CORE-EXP | Storage Center software expansion license |
| 10 | 1 | Software | Dell | SW-DAPR-BASE | Data Progression base license |
| 11 | 3 | Software | Dell | SW-DAPR-EXP | Data Progression expansion license |
| 12 | 2 | Fibre Channel Switch | Brocade | 300 | 24 port 8Gb FC switch |

Server Software

| | |
|---|---|
| OS Name and Version | FluidFS v2.0.7 |
| Other Software | SCOS 6.4-pre |
| Filesystem Software | FluidFS |

Server Tuning

| Name | Value | Description |
|---|---|---|
| Storage Center volumes write cache | disabled | Disable write cache for SAN volumes presented from Storage Center |
| Storage Center datapage size | 512KB | Page size for Storage Center volumes |

Server Tuning Notes

N/A

Disks and Filesystems

| Description | Number of Disks | Usable Size |
|---|---|---|
| Storage Center #1 | 36 | 19.6 TB |
| Total | 36 | 19.6 TB |

| | |
|---|---|
| Number of Filesystems | 1 |
| Total Exported Capacity | 19.5TB |
| Filesystem Type | FluidFS v2.0.7 |
| Filesystem Creation Options | Default NAS Volume with Unix security style |
| Filesystem Config | The SC8000 Storage Center system was configured with 36 drives, 6 SLC and 30 eMLC drives, configured in one disk pool. The SC8000 presented four 5TB LUNs to the FS8600 appliance. |
| Fileset Size | 16402.4 GB |

Storage Center SAN volumes were thinly provisioned and configured with defaults, except that the page size was changed to 512KB. Data was configured to be written to the default write level of RAID10.

Network Configuration

| Item No | Network Type | Number of Ports Used | Notes |
|---|---|---|---|
| 1 | 10GbE with jumbo frames | 4 | 10GbE SFP+ copper |

Network Configuration Notes

All four of the 10GbE Ethernet ports were connected to the client network for client traffic.

Benchmark Network

Each load client had two ports connected to a single switch. Each client communicated with the FS8600 cluster via two subnets. The FS8600 cluster presented one NFS export from one NAS volume through all NAS controllers on all client network ports on both subnets.

Processing Elements

| Item No | Qty | Type | Description | Processing Function |
|---|---|---|---|---|
| 1 | 4 | CPU | Intel Xeon Quad-Core 2.40GHz | FS8600 NAS processors (NFS, file system, networking) |
| 2 | 4 | CPU | Intel Xeon Six-Core 2.5GHz | SC8000 SAN processors (SCSI, FC, SAS, RAID) |

Processing Element Notes

Each NAS appliance has two controllers and each NAS controller has two quad-core processors. Each SAN controller has two six-core processors.
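As a quick consistency check, the CPU quantities in the Processing Elements table follow from the controller counts given in the note above and in the bill of materials. The sketch below is illustrative arithmetic only; the variable names are not taken from any Dell or SPEC tool, and the core totals are derived figures that the report itself does not state.

```python
# Counts taken from the bill of materials and the processing element notes.
nas_appliances = 1             # one FS8600 appliance
controllers_per_appliance = 2  # each NAS appliance has two controllers
cpus_per_nas_controller = 2    # two quad-core processors per NAS controller
cores_per_nas_cpu = 4

san_controllers = 2            # two SC8000 storage controllers
cpus_per_san_controller = 2    # two six-core processors per SAN controller
cores_per_san_cpu = 6

nas_cpus = nas_appliances * controllers_per_appliance * cpus_per_nas_controller
san_cpus = san_controllers * cpus_per_san_controller

# Derived totals (not reported in the source tables).
nas_cores = nas_cpus * cores_per_nas_cpu
san_cores = san_cpus * cores_per_san_cpu

print(f"NAS: {nas_cpus} CPUs, {nas_cores} cores")
print(f"SAN: {san_cpus} CPUs, {san_cores} cores")
```

Both CPU counts come out to 4, matching the Qty column for items 1 and 2.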

Memory

| Description | Size in GB | Number of Instances | Total GB | Nonvolatile |
|---|---|---|---|---|
| FS8600 memory backed by integrated BPS system | 24 | 2 | 48 | NV |
| System memory and read cache for SC8000 controllers | 16 | 2 | 32 | V |
| Grand Total Memory Gigabytes | | | 80 | |
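The memory totals can be cross-checked with a few lines of arithmetic. This is only a sketch over the two rows of the Memory table; the tuple layout and variable names are hypothetical, and the nonvolatile subtotal is a derived figure rather than one the report lists separately.

```python
# (description, size in GB, number of instances, nonvolatile?) from the Memory table.
memory_rows = [
    ("FS8600 memory backed by integrated BPS system", 24, 2, True),
    ("System memory and read cache for SC8000 controllers", 16, 2, False),
]

# Per-row totals are size multiplied by the number of instances.
row_totals = [size * count for _, size, count, _ in memory_rows]
grand_total_gb = sum(row_totals)

# Subtotal of memory marked NV in the table.
nonvolatile_gb = sum(
    size * count for _, size, count, is_nv in memory_rows if is_nv
)

print(f"Grand total: {grand_total_gb} GB, nonvolatile: {nonvolatile_gb} GB")
```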

Memory Notes

Stable Storage

The write cache memory within each FS8600 is protected by an internal battery and mirrored to a peer before write operations are acknowledged back to NFS clients. Write cache in the SC8000 is normally protected by a capacitor-backed cache card, but write cache was disabled for this testing, meaning all writes were committed to disk before being acknowledged.

System Under Test Configuration Notes

System under test consisted of one FS8600 appliance in a cluster (an appliance having two controllers) providing a single distributed file system leveraging block storage from a single SC8000 Compellent Storage Center. The block storage space from the SC8000 was combined into a single NAS pool and one NFS export was created on one NAS volume. Client connections were equally distributed across both NAS controllers in the FS8600 cluster.

Other System Notes

Test Environment Bill of Materials

| Item No | Qty | Vendor | Model/Name | Description |
|---|---|---|---|---|
| 1 | 8 | Dell | R620 | Dell 1U server dual Quad-Core 2.9GHz 64GB RAM |
| 2 | 1 | Dell | S4810 | Dell Force10 48-port 10GbE Switch |

Load Generators

| | |
|---|---|
| LG Type Name | Linux clients |
| BOM Item # | 1 |
| Processor Name | Intel Xeon Quad-Core E5-2690 |
| Processor Speed | 2.90 |
| Number of Processors (chips) | 2 |
| Number of Cores/Chip | 4 |
| Memory Size | 64 GB |
| Operating System | CentOS 6.3 2.6.32-279.11.1.el6.x86_64 |
| Network Type | Intel X520 dual-port 10GbE |

Load Generator (LG) Configuration

Benchmark Parameters

| | |
|---|---|
| Network Attached Storage Type | NFS V3 |
| Number of Load Generators | 8 |
| Number of Processes per LG | 64 |
| Biod Max Read Setting | 2 |
| Biod Max Write Setting | 2 |
| Block Size | 256 |

Testbed Configuration

| LG No | LG Type | Network | Target Filesystems | Notes |
|---|---|---|---|---|
| 1..8 | Linux Client | 1 | /spec/nfs | Export on subnet A |
| 1..8 | Linux Client | 2 | /spec/nfs | Export subnet B |

Load Generator Configuration Notes

All eight load generators were configured identically and accessed the one NFS export across two 10GbE ports each, with each port defined on a separate subnet.

Uniform Access Rule Compliance

Each LG hosted 64 processes which accessed the single file system utilizing all FluidFS NAS controllers and NICs in an even manner. The FluidFS file system is distributed evenly across all NAS controllers and Storage devices.
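The even distribution described above can be put into numbers. The sketch below assumes the load processes split exactly evenly across the two NAS controllers, as the configuration notes state for client connections. The per-process rate is a derived average at the peak throughput point, not a figure reported by the benchmark, and all variable names are illustrative.

```python
# Values from the Benchmark Parameters table and the headline result.
load_generators = 8
processes_per_lg = 64
nas_controllers = 2        # one FS8600 appliance with two controllers
peak_ops_per_sec = 131684  # peak throughput from the Performance table

total_processes = load_generators * processes_per_lg

# Assumes an exactly even split of processes across NAS controllers.
processes_per_controller = total_processes // nas_controllers

# Derived average load per process at peak (not reported in the source).
ops_per_process = peak_ops_per_sec / total_processes

print(f"{total_processes} processes, {processes_per_controller} per controller")
print(f"about {ops_per_process:.1f} ops/sec per process at peak")
```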

Other Notes

Block size for NFS mount configured to 256KB.

Config Diagrams

Generated on Thu May 30 11:27:47 2013 by SPECsfs2008 HTML Formatter

Copyright © 1997-2008 Standard Performance Evaluation Corporation

First published at SPEC.org on 28-May-2013