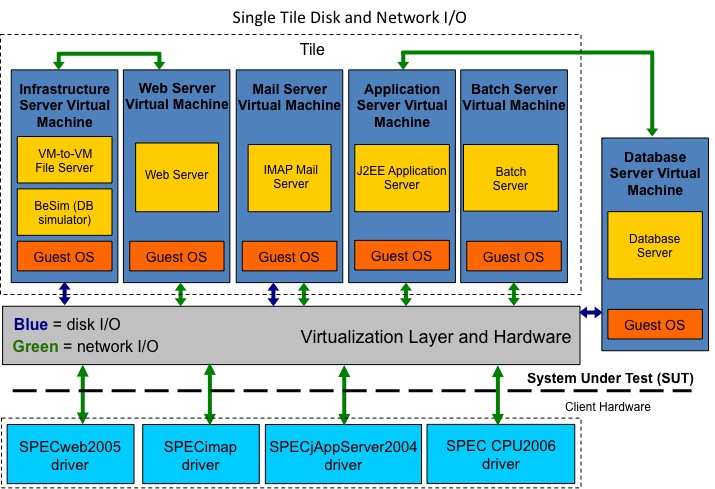

SPEC virt_sc ® 2013 is designed to be a standard method for measuring a virtualization platform's ability to manage a server consolidation scenario in the datacenter and for comparing performance between virtualized environments. It is intended to measure the performance of the hardware, software, and application layers in a virtualized environment. This includes both hardware and virtualization software and is intended to be run by hardware vendors, virtualization software vendors, application software vendors, academic researchers,and datacenter managers. The benchmark is designed to scale across a wide range of systems and is comprised of a set of component workloads representing common application categories typical of virtualized environments.

Rather than offering a single benchmark workload that attempts to approximate the breadth of consolidated virtualized server characteristics found today, SPEC virt_sc uses a four-workload benchmark design: a webserver, Java application server, a mail server, and a batch server workload. The four workloads of which SPEC virt_sc is composed are derived from SPECweb2005, SPECjAppServer2004, SPECmail, and SPEC CPU2006. All four workloads drive pre-defined loads against sets of virtualized machines (VMs). The SPEC virt_sc harness running on the client side controls the workloads and also implements the SPECpower methodology for power measurement. The benchmarker has the option of running with power monitoring enabled and can submit results to any of three categories:

As with all SPEC benchmarks, an extensive set of run rules govern SPEC virt_sc disclosures to ensure fairness of results. SPEC virt_sc results are not intended for use in sizing or capacity planning. The benchmark does not address multiple host performance or application virtualization.

The benchmark suite consists of several SPEC workloads that represent applications that industry surveys report to be common targets of virtualization and server consolidation. We modified each of these standard workloads to match a typical server consolidation scenario's resource requirements for CPU, memory, disk I/O, and network utilization. The SPEC workloads used are:

We created an additional workload called SPECpoll. SPECpoll sends and acknowledges network pings to all VMs in the 0% load phase (active idle) during power-enabled runs.

We researched datacenter workloads and determined suitable load parameters. We refined the test methodology to ensure that the results scale with the capabilities of the system. The benchmark requires significant amounts of memory (RAM), storage, and networking in addition to processors on the SUT. Client systems used for load generation must also be adequately configured to prevent overload. Storage requirements and I/O rates for disk and networks are expected to be non-trivial in all but the smallest configurations. The benchmark does not require that each workload have a maximum number of logical (hardware-wise) processors and is designed to run on a broad range of single host systems.

The benchmark presents an overall workload that achieves the maximum performance of the platform when running one or more sets of Virtual Machines called tiles.

Scaling the workload on the SUT consists of running an increasing

number of tiles. Peak performance is the point at which the addition

of another tile (or fraction) either fails the Quality of Service

(QoS) criteria or fails to improve the overall metric.

| Rate Metric | Target Value |

New Allowance |

Original Allowance |

|---|---|---|---|

| Vehicle Purchasing Rate | 665 | +/-3% | +/-2.5% |

| Large Order Vehicle Purchase Rate | 350 | +/-7% | +/-5% |

| Regular Vehicle Purchase Rate | 315 | +/-6% | +/-5% |

| Adds: |

|---|

| CLOCK_SKEW_ALLOWED |

| IGNORE_CLOCK_SKEW |

| POLLING_RMI_PORT |

| Overwrites: |

|---|

| CLIENTS |

| RUN_SECONDS |

| WARMUP_SECONDS |

| RAMPUP_SECONDS |

| SIMULTANEOUS_SESSIONS |

| BEAT_INTERVAL |

| MAX_OVERTHINK_TIME |

At the time the specweb prime client class is invoked by the clientmgr process, it also may be passed the following additional command line parameters:

The parameters for the first two bullets are always included and are

provided by the prime controller based on the parameters in

Control.config. The last bulleted item is optional and must be

specified in the workload's PRIME_APP value, if desired. For example:

PRIME_APP[1]

= "-jar specweb.jar -lh eth2hostname"

The most significant change to the SPECweb2005 Support workload for SPEC virt_sc was to the fileset characteristics. The Support workload was revised to represent a larger website with file sizes more representative of software downloads and multimedia files currently found on many support sites. The access patterns have been altered by the combination of a smaller zipf alpha value, an increased number of download files per directory and changes to the frequency distributions for accessing those files. SSL and TLS have also been enabled as websites now commonly use HTTPS to secure their users' data.

Following are the changes to the parameter values in

SPECweb_Support.config related to these changes:

| Property | New value | Original value |

|---|---|---|

| ZIPF_ALPHA | 0.55 | 1.2 |

| DIRSCALING | 0.1 | 0.25 |

| NUM_CLASSES | 7 | 6 |

| CLASS_0_DIST CLASS_1_DIST CLASS_2_DIST CLASS_3_DIST CLASS_4_DIST CLASS_5_DIST CLASS_6_DIST |

0.117 0.106 0.264 0.203 0.105 0.105 0.100 |

0.1366 0.1261 0.2840 0.2232 0.1250 0.1051 N/A |

| DOWNLOADS_PER_DIR | 24 | 16 |

| CLASS_4_FILE_DIST CLASS_5_FILE_DIST CLASS_6_FILE_DIST |

"0.575, 0.425" "0.350, 0.220, 0.115, 0.100, 0.100, 0.115" "0.475, 0.525" |

1.000 1.000 N/A |

| CLASS_2_FILE_SIZE CLASS_3_FILE_SIZE CLASS_4_FILE_SIZE CLASS_5_FILE_SIZE CLASS_6_FILE_SIZE |

"1048576, 256001" "2097154, 1048573" "3524287, 428901" "4302606, 904575" "35242871, 3904575" |

"1048576, 492831" "4194304, 1352663" "9992929, 0" "37748736, 0" N/A |

Also, because the web server file sets for each tile would otherwise

contain identical data, the Wafgen file set generator was modified to

add values to the data unique to each workload tile, and the SPECweb

response validation process now checks for these unique values when it

validates the file contents.

The parameter OVERTHINK_ALLOW_FACTOR was also added in order to loosen

the client excess think time limits (MAX_OVERTHINK_TIME) on the

client. MAX_OVERTHINK_TIME is now calculated as the RUN_SECONDS times

the OVERTHINK_ALLOW_FACTOR. So client-caused delay in sending requests

of up to 1% of the total run time is now allowed.

SSL and TLS support has also been enabled in the Support workload for

SPEC virt_sc by setting USE_SSL = 1 in SPECweb_Support.config. This

feature is required for compliant SPEC virt_sc benchmark runs.

Requests for polling data sent by the prime controller

return the same polling data as the regular SPECweb2005 workload and

is of the format:

<System

Poll Time>,<Page Requests>,<Pass Count>, <Fail

Count>,<Error Count>,<Total Bytes>, <Response

Time>,<Time Good>,<Time Tolerable>, <Time

Fail>,<Min Resp Time>,<Max Resp Time>, <Non-client

close>

See the SPECweb2005 documentation for further information on these

values.

The IMAP mail server workload is based loosely on the SPECmail

benchmark. The IMAP component of SPEC virt_sc simulates the load

generated by 500 mail clients (for a compliant full tile) performing

common mailserver activities such as checking for new messages,

fetching, sending, and deleting messages, searches for particular mail

text, etc. The mailserver is pre-populated for each client user

using a provided mailstore generation application (see the Mailserver

VM Setup section of the User Guide for details). For ease of

benchmarking, the benchmark maintains a persistent state from

benchmark run to run, meaning that there are the same number of total

messages at the beginning of each run, with each message maintaining

the same initial state (e.g., SEEN versus UNSEEN). The working

size of the mailstore requires approximately 12GB of storage space

(excluding ancillary mailserver folder structures, indices, etc).

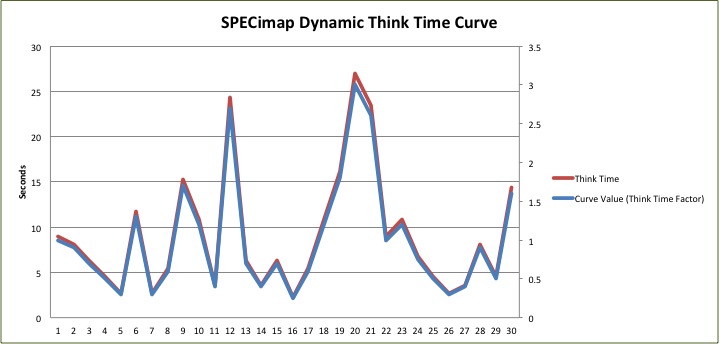

In order to simulate the dynamic nature of daily mailserver activity,

the IMAP workload intensity for the 500 active users is dynamic over

the measurement interval. While the 500 users will all be active

during the measurement interval, the average delay between IMAP

requests changes based on a preset ‘think time curve’. The

average think time for primary IMAP operations over the entire

Measurement Interval is 9 seconds. However, depending on the current

position on the dynamic curve, the average think time at any given

point varies from a minimum of 2.25 seconds to a maximum of 27

seconds. Each tile will begin at a point in the curve which is

offset from the previous tile by 7 curve point values. This is

to prevent an artificial overlap of peaks and troughs of workload

activity. Each point on the think time curve will execute for 20

seconds, which is the default “step rate” for the dynamic curve.

Each tile will traverse the think time curve every 10 minutes. (

30 curve points, 20 seconds per point = 600 seconds)

There are two mail folders that are used during the test run.

The first is the common top level 'INBOX' which contains ~2000

pre-populated mail messages, 70% of which have been seen (SEEN flag is

set) and 30% are unseen (UNSEEN flag is set). The second folder

is the SPEC folder which is used to store the messages that are

created during the test run. The messages that accumulate in

this mailbox are occasionally deleted during the test run and are

always automatically deleted prior to the beginning of the test run to

maintain a consistent mailstore state from run to run.

The workload consists of four 'primary' IMAP operations: create new mail message (APPEND), retrieve mail message (FETCH_RFC822), text search subject header (SEARCH_ALL_SUBJECT), and check for new messages in the 30 most recent messages (SEARCH_UNSEEN). The user chooses a pseudo-random number to determine which primary operation to execute next based on the following transaction mix. The allowed mix variations due to the nature of random selection are also shown below (i.e., the variance allowed of the final measured mix per IMAP operation over all transactions that occurred during the POLLING_INTERVAL).

|

Primary IMAP command mix

|

|||

|---|---|---|---|

| IMAP Command Type | Min. Allowed | Max.

Allowed |

|

| APPEND |

26.31% | 26.71% | |

| FETCH_RFC822 |

66.79% | 67.81% | |

| SEARCH_ALL_SUBJECT

|

3.25 | 3.43% | 3.60% |

| 1.95% | 2.16% | ||

Each of the primary operations may trigger one or more secondary

operations as described below.

Secondary APPEND operations:

The deletion of all accumulated messages in the SPEC folder

(STORE_ALL_FLAGS_DELETED, followed by EXPUNGE) occurs for 2% of the

APPEND primary operations.

Secondary FETCH operations:

Since 30% of the mailstore messages have the UNSEEN flag set, 30% of

FETCH operations reset this flag after the FETCH operation to UNSEEN

(UID_STORE_NUM_UNSET_FLAGS_SEEN) in order to maintain the consistent

mailstore state.

Secondary SEARCH_UNSEEN operations:

The SEARCH_UNSEEN operation represents the typical IMAP client

application that is often active throughout the workday and

periodically checks the IMAP server for new messages. Therefore,

each SEARCH_UNSEEN have a corresponding login (IMAP_LOGIN), a logout

(IMAP_LOGOUT). Additionally, for every new message (flag set to

UNSEEN) that is found in the most recent [10..30] mailstore messages,

the message header is fetched using the PEEK IMAP command

(FETCH_NUM_RFC822HEADER)

The allowed mix variations due to the nature of random selection are

also shown below (i.e., the final mix determined at the end of the run

over all transactions that occurred during the POLLING_INTERVAL). The

percentage of allowed variation between the measured and the minimum

and maximum mix is increased for secondary operations which occur at a

low frequency.

Requests for polling data sent by the prime controller

return a set of comma-delimited metrics in the following format:

<System

Poll Time>,<Total Count>,<Pass Count>,<Fail

Count>,<Error Count>,<Total Resp. Time>,<Min Resp.

Time>,<Max Resp. Time>

The *Count values are the total IMAP command counts from the beginning

of the polling period. The 'Total Resp. Time' is the sum of the

response time (in milliseconds) for all IMAP commands.

The batch server is intended to represent a VM that is idle most of

the time but has occasional spikes of activity that require moderate

amounts of processing resources that can be provided at lower priority

compared to others. The batch server uses one of the modules from the

SPEC CPU2006 SPECint suite, 401.bzip2, as the batch workload. Ten

copies of the 401.bzip2 "train" workload must be run within a

specified time in order to satisfy the requirements for this workload.

The batch workload does not have a metric that contributes to the

overall SPEC virt_sc metric, but rather returns a PASS/FAIL result;

as long as the entire batch workload completes in the specified time,

it passes.

As with the other workloads, the specbatch class implements the

PrimeRemote interface and starts an RMI listener to listen for RMI

commands from the prime controller.

Like other workloads, the SPECbatch prime client, specbatch, uses two primary threads: one to listen for and respond to prime controller RMI calls and the other for workload execution. The SPECbatch RMI listener thread is similar in its implementation to all other workloads. The workload execution thread for the specbatch prime client launches a workload run script, defined by BATCH_SCRIPT in SPECbatch/Test.config, on the host VM with two parameters:

For a multi-tile run, the specbatchclient process launches the

workload script at different times on each tile depending on the tile

ID. The first tile's workload script is launched as soon as the

polling phase of the benchmark begins. The second tile's workload is

launched a number of seconds later defined by the OFFSET parameter in

Test.config, the third tile's workload is launched OFFSET seconds

after the second tile's, and so on. If the number of tiles run exceeds

the value OFFSET_RESET_TILECNT (defined in Test.config), the first

tile after this value starts its workload at the beginning of the

polling phase and the offsets for subsequent tiles are staggered from

this time. This pattern repeats for each multiple of

OFFSET_RESET_TILECNT. For a compliant run, OFFSET must equal 900 (15

minutes) and OFFSET_RESET_TILECNT must equal 4.

After period of time - defined by TIMEINTERVAL in

SPECbatch/Test.config - from the start of the last workload script

invocation, the next workload script invocation begins. For a

compliant benchmark run, TIMEINTERVAL must equal 3600.

Once the workload execution thread launches the workload script, it

monitors the status of the script and collects the results at the end

of the benchmark. If the workload script runs longer than the expected

value, defined by DURATION in Test.config, the workload fails

validation.

The SPECbatch workload is based on the SPEC CPU2006 harness. As such,

certain steps must be followed in order to prepare the SPECbatch

workload for execution. The SPECbatch workload only uses a subset of

the SPEC CPU2006 harness and therefore cannot be used for compliant

SPEC CPU2006 measurements.The SPEC CPU2006 harness provides the

workload as source code, so it must be compiled prior to its use.

Therefore a compiler must be available on the VM to accomplish this.

A batch run script must be created to control the execution of the

SPECbatch workload. There are sample run scripts that are included in

the kit that provide guidance in what is needed for such a script.

Please refer to the next section for more details.

Once the workload executable and run script have been built, the

SPECbatch workload is ready for use.

The SPECbatch workload run script controls the manner in which the required number of 401.bzip2 workload executions are handled. The script is passed two parameters from the specbatch process, the run-specific results directory and BATCH_COPY_COUNT. The script then needs to accomplish four basic functions in order to achieve a compliant result:

As stated before, there are sample workload run scripts provided in

the SPECbatch folder that can be used directly or as models for custom

scripts. The script must be run from the SPECbatch/cpu2006-virt

folder. There is a symbolic link in the SPECbatch/cpu2006-virt folder

pointing to one of the sample workload scripts. The default value in

Control.config points to this symbolic link.

The workload run script is platform dependent, so the specbatch

process invokes the script using the shell environment defined by

BATCH_RUN_ENVIRONMENT in the SPECbatch/Test.config.

Requests for polling data sent by the prime controller

return a string in the following format:

When the getData() request is received from the prime controller on

the RMI listening thread, the SPECbatch prime client sends a

getHeartbeat() RMI request to specbatchclient, which specbatchclient

relays to the target VM. The format of the returned string of

comma-separated variables is:

<System

Poll Time>,<Heartbeats>,<Total Beats>,<Resp.

Msec>,<Min. Msec>,<Max. Msec>,<Total

Msec>,<QOS Pass>,<QOS Fail>

Please refer to the SPECpoll polling section for details on these

values.

SPECpoll is used to poll the server VMs to confirm that they are running and responsive. While not a workload it behaves like the other workloads. It must implement the PrimeRemote interface for the prime controller to be able to communicate with it through RMI. Beyond implementing this common communication interface, the SPECpoll process fundamentally just waits for and responds to polling commands from the prime controller.

There are three jar files used for SPECpoll: specpoll.jar,

specpollclient.jar, and pollme.jar. The first two provide the prime

client and client interface to the workload common to all workloads in

the benchmark client harness. The latter jar file, pollme.jar, is not

used on the client side, but must be installed and running on all VMs

so that it can listen for and respond to polling requests from

specpollclient.

SPECpoll's primary function is to poll all VMs during an active idle

measurement interval. It is in order to provide this function that the

SPECpoll process must be installed on each set of client systems that

host the four workloads. Specifically, during an active idle

measurement interval, the prime client goes to the same set of

PRIME_HOSTs and WORKLOAD_CLIENTS defined in Control.config for the

loaded measurement interval, but instead of starting the mail, web,

batch, and appserver workloads, it starts the SPECpoll process.

SPECpoll prime client (specpoll) uses two primary threads: one to

listen for and respond to prime controller RMI calls and the other for

process execution. The SPECpoll RMI listener thread is similar in its

implementation to the four workloads. The process execution thread for

the specpoll prime client is as simple of a process execution sequence

as is possible for this virtualization benchmark and consists of the

following sequence of steps:

At first glance it might appear as though this process does nothing at all since the process execution thread sleeps during the ramp-up, warm-up, and runtime phases of the four workloads. However, VM polling is only required in response to a prime controller RMI command, getData(), which is executed on the RMI listener thread that listens for RMI commands from the prime controller. So unlike the four workloads that drive load during a run interval, there is nothing more that this "primary" process execution thread needs to do during these periods other than to wait until these phases have expired.

Like the SPECpoll prime client process, the SPECpoll client process (specpollclient) is a minimal client process implementation. In addition to maintaining and returning the metric results (common to all workload client processes), specpollclient has two primary methods: setConfig() and getHeartbeat().

The setConfig() method checks the QOS metric values used in the

configuration object passed to it by the specpollclient and gets the

target host VM and RMI port. It then checks whether a second host VM

name:port pair was passed to it. If so, when it makes the setConfig()

RMI call to the target VM's pollme process, it passes that name:port

pair to the target VM's SPECpoll listener. Once this is done, the

target VM has all of the information it neeeds to respond to

getHeartbeat() RMI requests.

When the specpollclient process receives a getHeartbeat() request from

the specpoll prime client, it forwards this request to its

corresponding SPECpoll listener and processes the data returned. It

then returns the results to the SPECpoll prime client, prefixing the

data with the specpollclient's system time, measured after receiving

the getHeartbeat() response from the target VM.

The SPECpoll listener (pollme) runs on all VMs with which the SPECpoll clients are expected to send polling requests. The pollme listener, after being invoked and setting itself up to listen on the specified network interface and port, simply waits for setConfig() and getHeartbeat() RMI calls.

Before receiving a getHeartbeat() RMI call, the SPECpoll client first

needs to send a setConfig() command to the pollme listener. If the

listener is expected to relay a getHeartbeat() RMI call to a backend

server, this backend host name and listening port are passed in the

setConfig() RMI call. Tthe pollme listener uses the host VM name

parameter sent with the setConfig() RMI call to set up an RMI

connection to that host name and port for relaying future

getHeartbeat() RMI calls.

When a getHeartbeat() RMI call is received from the SPECpoll client by

the SPECpoll listener, it checks whether it needs to relay the

getHeartbeat() RMI call to a backend server, and if so, makes its own

getHeartbeat() RMI call to the backend server. Each getHeartbeat() RMI

call returns one "heartbeat" along with however many heartbeats are

returned by any relayed getHeartbeat() call to a backend server. So

for the mailserver and batchserver that have no backend server, these

calls return "1" and for the webserver and application servers that

have backend servers, these getHeartbeat() RMI calls return "2" to the

SPECpoll client.

These SPECpoll listeners have no concept of a benchmark begin and end

time. They simply remain listening on the network interface and port

on which they were started, waiting for RMI commands until these

processes are terminated manually. The client harness does

not stop or start these listening processes on the VMs.

During an active idle measurement phase, requests for polling data

sent by the prime controller return a string in the following

format.

When the prime controller receives the getData() request from on the

RMI listening thread, the SPECpoll prime client sends a getHeartbeat()

RMI request to specpollclient, which specpollclient relays to the

target VM. The format of the returned string of comma-separated

variables is:

<System

Poll Time>,<Heartbeats>,<Total Beats>,<Resp.

Msec>,<Min. Msec>,<Max. Msec>,<Total

Msec>,<QOS Pass>,<QOS Fail>

The client harness controls the four modified SPEC benchmark workloads. The workload modifications that change the behavior of the workload are explained in more detail in previous sections of this guide. This section focuses on how the client harness controls these workloads and the modifications made to these workloads that allow for this control and coordination.

Each workload is required to implement the PrimeRemote interface.

This interface provides the names of the RMI methods that the prime

controller expects any workload to be able to execute in order to

ensure correct coordination of execution of these workloads.

Correspondingly, each workload can rely on the availability of the RMI

methods listed in the SpecvirtRemote interface for the prime

controller. These methods are also a part of the coordination

mechanism between the prime controller and the workloads. A listed

description of these methods is provided in previous sections of this

guide.

Below is the sequence of events that occur between the prime

controller and the workloads during benchmark run execution. (This

sequence assumes the client manager (clientmgr) processes have been

started for each workload prime client and for the corresponding

workload client processes. It also assumes that the pollme processes

have been started on the VMs and are listening on their respective

ports, as well as any power/temperature daemon (PTDaemon) processes

used to communicate with power or temperature meters. Upon starting

the specvirt process:

On both the prime controller and on each of the prime clients there are typically two separate threads engaged in different tasks. For the prime controller, one thread is primarily responsible for listening for and responding to RMI calls ,and the other is primarily responsible for controlling prime client execution. On the prime clients, similarly there is a thread primarily tasked with listening for and responding to RMI calls from the prime controller, and a second thread is responsible for coordinating its workload run with its workload clients.

The following flow diagram illustrates the sequence of interactions

between these threads:

<tile>,<wkload>,<prime_client_timestamp>,<

workload-specific CSV data>

If there is power-related data (i.e. if USE_PTDS =1), then

power/temperature polling data is also recorded following the workload

polling data in the CSV format:

PTD[n],<timestamp>, <PTDaemon type-specific CSV data>

After the polling interval is complete and all of this polling data

has been collected and recorded, all configuration validation or

runtime errors for this interval are collected by the prime controller

and recorded following the polling data in the format:

ERR-<run_interval>-<tile>-<wkload>-<error_number>

= <error string>

Next recorded in the result raw file are the aggregate runtime

results, starting with the workload-specific compliance, throughput,

and QoS metrics in the format:

<run_interval>-PRIME_CLIENT.COMPLIANCE[<tile>][<wkload>]

= <true | false>

<run_interval>-PRIME_CLIENT.METRIC_VALUE[<tile>][<wkload>]

= <value>

<run_interval>-PRIME_CLIENT.QOS_VALUE[<tile>][<wkload>]

= <value>

Immediately following this data are the load levels used during the

run interval, reported in the format:

<run_interval>-PRIME_CLIENT.LOAD_LEVEL[<tile>][<wkload>]

= <value>

Following these values are the workload-specific submetric values.

Because there are multiple submetric types as well as submetric

values, the multiple values are listed in CSV format and the multiple

types of workload-specific submetric data are distinguished by

separate indexes. The first line (type index 0), for each workload is

reserved for the workload-specific submetric labels, and these are

recorded in the format:

<run_interval>-PRIME_CLIENT.SUBMETRIC_VALUE[<tile>][<wkload>][0]

= "<workload-specific CSV labels>"

This line is required in order to support the workload-agnostic

architecture of the prime controller. If a new workload was

added (or one was replaced), changing these labels is all that is

required in order for the prime controller to be able to support a

different set of workload submetrics.

Following this submetric label for the tile and workload is that

workload's request type-specific data in the format:

<run_interval>-PRIME_CLIENT.SUBMETRIC_VALUE[<tile>][<wkload>][<req_type>]

= "<workload-specific CSV values>"

The number of CSV labels in the 0-indexed SUBMETRIC_VALUE must match

the number of CSV values contained in all of the greater-than-0

request type indexes that follow for that workload. Also, the number

of submetric request types are not required to be identical for all

workloads. For example, the jApp workload and the mail workload each

have two request types (manufacturing and dealer for jApp, and append

and fetch for mail) and therefore two request type indexes (1 and 2),

while the web and batch workloads each have only one request type

(support and heartbeats, respectively) and therefore only one request

type index (1).

The final set of aggregate data are the power-related measurement

data. For power meters, the data collected is the watts, volts, amps,

and power factor. For temperature meters, it collects the temperature

and humidity. This data is of the format:

<run_interval>-

PTD[n][<data_type>] = "<PTDaemon data type-specific CSV

values>"

This data is followed by a newline character, a string of dashes, and

another newline character. If there is more than one run interval, the

same data from the next run interval is recorded.

Once all run intervals have completed, the runtime configuration values are recorded in the result file. These included the configuration properties from the Control.config and Testbed.config files as well as all configuration properties created by the prime controller during the benchmark run. One example of the controller-generated configuration properties is the RUN_SECONDS properties. These are calculated and set by the prime controller for each workload to ensure it meets the specified POLL_INTERVAL_SEC value.

Once all of the above data has been recorded in the results file, the prime controller takes all of this information and encodes and appends it to the end of the file. This provides an ability to compare the original post-run configuration with any post-run editing done on this file, and this capability is used by the reporter in order to ensure that only allowed field editing is added to any submission file created using the reporter.

The SPEC virt_sc reporter is used to create result submission files from raw files, regenerate raw files after editing them post-run, and to create formatted HTML run result pages. It is invoked automatically by the prime controller to create the formatted HTML page at the end of a run, but must be run manually to generate submission files or to regenerate an edited raw file.

Raw files commonly require post-run editing in order to update, correct, or clarify configuration information. However, only the RESULT_TYPE property in Control.config and the properties in Testbed.config are allowed to be edited in the raw result file. (Many configuration properties in Control.config are editable before a run starts, but cannot be edited afterward.)

The reporter ensures that only editable values are retained in a

regenerated raw or submission file using the following set of

regeneration steps:

Note: In order for the configuration property string comparison to

work correctly cross-platform, any Windows backslashes are converted

to forward slashes before being stored in Hashtables, even for

non-editable fields. Therefore, a regenerated raw file's configuration

properties always contain forward-slash characters in place of

backslash characters, even where backslash characters existed in the

original raw file in post-run non-editable fields.

The reporter concurrently creates the new raw file as well as the

submission (.sub) file when regenerating the raw file except in the

case where a sub file was passed into the reporter rather than a raw

file.

Once a valid raw file and/or submission file have been generated, the

reporter then uses this regenerated raw file to create the

HTML-formatted final report(s). See the section titled Formatted

(HTML) File Generation below for further details.

Any time you invoke the reporter with the parameters used to create a submission file, it assumes an edited raw file has been passed to it and goes through the editing validation process, assuring and preserving only allowed edits of the raw file submitted. Once a valid raw file has been recreated, the reporter then prefixes all non-configuration properties with a # character to preserve them in the submission process, and then prefixes the configuration properties with spec.virt_sc2013. per the SPEC submission tool requirements. This modified file is saved with a .sub extension, identifying it as a submittable raw file.

All invocations of the reporter with the "-s" or "-r" flags result in one or more formatted HTML reports being generated for the corresponding raw file or submission file. There are three different types of HTML-formatted reports the reporter can create depending on the type of benchmark run and the RESULT_TYPE or type parameter value passed to the reporter.

If no PTDaemons were used in the run, then the reporter always

generates only a performance report, regardless of the RESULT_TYPE or

-t value passed to the reporter. The RESULT_TYPE and/or -t parameter

values only control result type generation for benchmark runs that

include power data. The type of report generated is appended to the

html file name in the following formats:

| performance-only: | <raw_file_name>-perf.html |

| server-only perf/power: | <raw_file_name>-ppws.html |

| server-plus-storage perf/power: | <raw_file_name>-ppw.html |