SPECvirt ® Datacenter 2021 Run and Reporting Rules¶

1.0 Introduction¶

The SPECvirt ® Datacenter 2021 benchmark is the third generation SPEC benchmark for evaluating the performance of virtualized environments and the first for measuring the performance of multiple hosts in virtualized datacenters.

As virtualized environments become increasingly complex, it becomes more important to measure the performance of the overall ecosystem including provisioning of new resources, balancing loads across hosts, and overall capacity of each host. The SPECvirt Datacenter 2021 benchmark measures all of these aspects, with particular attention to the hypervisor management layer.

This document specifies how the SPECvirt Datacenter 2021 benchmark is to be run for measuring and publicly reporting performance results. These rules abide by the norms laid down by the SPEC Virtualization Committee and accepted by the SPEC Open Systems Steering Committee. This ensures that results generated with the benchmark suite are meaningful, comparable to other generated results, and repeatable, with sufficient documentation covering factors pertinent to duplicating the results.

Per the SPEC license agreement, all results publicly disclosed must adhere to these Run and Reporting Rules.

2.0 Philosophy¶

The general philosophy behind the rules of the SPECvirt Datacenter 2021 benchmark is to ensure that a result fairly represents the performance of a valid multi-host virtualized solution environment under load, and enough configuration information is provided with the result to allow an interested independent party to reproduce the reported results.

The following attributes are expected for each result:

Proper use of the SPEC benchmark tools as provided.

Availability of an appropriate full disclosure report (FDR).

Furthermore, SPEC expects that any public use of results from this benchmark suite shall be for the SUT (System Under Test) and configurations that are appropriate for public consumption and comparison. Thus, it is also expected that:

The SUT and configuration are generally available, documented, and supported by the vendor(s) or provider(s). See section 4.1.2 System Under Test (SUT).

Hardware and software used to run this benchmark must provide a multi-host environment which provides the capability to provision, configure, and manage the virtualized resources necessary for the datacenter operations exercised by the benchmark.

Optimizations utilized must improve performance for a larger class of workloads than those defined by this benchmark suite, i.e. no “benchmark specials” are allowed.

SPEC requires that any public use of results from this benchmark follow the SPEC Fair Use Rule and those specific to this benchmark in the SPEC Fair Use Rules for SPECvirt Datacenter 2021. In the case where it appears that these rules have not been adhered to, SPEC may investigate and request that the published material be corrected.

You can use any virtualization platform that can perform all the operations the benchmark requires while also complying with the run and reporting rules detailed in this document.

2.1 Fair Use of SPECvirt Datacenter 2021 Results¶

Consistency and fairness are guiding principles for SPEC. To ensure that these principles are met, any organization or individual who makes public use of SPEC benchmark results must do so in accordance with the SPEC Fair Use Rule. Fair Use clauses specific to SPECvirt Datacenter 2021 are covered in the SPEC Fair Use Rules for SPECvirt Datacenter 2021.

2.2 Research and Academic Usage¶

Please consult the SPEC Fair Use Rules on Research and Academic Usage.

2.3 SPECvirt Datacenter 2021 Benchmark Configuration¶

For a SPECvirt Datacenter 2021 result, a pre-configured template image provided as part of the benchmark kit must be used for all client and SUT workload VMs. No modifications can be made to the contents of the VM template. Tuning of the SUT hardware and the hypervisor is allowed, within the rules listed below.

The SPECvirt Datacenter 2021 benchmark provides deployment and control toolsets for VMware’s ESXi v6.7 and Red Hat’s RHV v4.3 hypervisor environments. SPEC acknowledges newer versions of these hypervisors or other hypervisor solutions may require different scripts to support new control interfaces or APIs. See Appendix A if your SUT hypervisor environment requires a new or updated deployment/control toolset to run the benchmark.

2.4 About SPEC¶

2.4.1 SPEC May Adapt Suite¶

SPEC reserves the right to adapt the benchmark codes, workloads, and rules of the SPECvirt Datacenter 2021 benchmark as deemed necessary to preserve the goal of fair benchmarking. SPEC notifies members and licensees whenever it makes changes to this document and renames the metrics if the results are no longer comparable. The version of this document posted on spec.org is considered to be the latest and applies to any submitted results prior to acceptance.

2.4.2 Reference Links Disclaimer¶

Relevant standards are cited in these run rules as URL references, and are current as of the date of publication. Changes or updates to these referenced documents or URLs may necessitate repairs to the links and/or amendment of the run rules. The most current run rules are available at the SPECvirt Datacenter 2021 web site. SPEC notifies members and licensees whenever it makes changes to the suite.

2.4.3 Notes on Mitigation of Significant Security Vulnerabilities¶

Several recent security issues that affected certain hardware systems have been reported to the public. Addressing these security vulnerabilities has had significant performance implications in some cases. Due to heightened awareness of such vulnerabilities and the performance impact of their mitigation, SPEC has required that for each published result prominent statements be provided in the FDR documentation stating whether certain mitigations have been applied to the SUT (see: SPECvirt Datacenter 2021 FAQ). SPEC reserves the right to add to or remove from the list of mitigations that need to be reported in such fashion. However, even if explicit text is not required, it is expected that any software, BIOS, or firmware update made to a system that affects performance still needs to be sufficiently documented to ensure the result can be reproduced and that the reported solution is supported by the benchmark sponsor, hardware vendor(s), or software vendor(s).

3.0 Definitions¶

In a virtualized environment, commonly used terms can have multiple or different meanings. To avoid ambiguity, this section defines terms that are used throughout this document:

Clients: A client is a virtual machine that is used to initiate benchmark transactions and record their completion. In many cases, a client simulates the work requests that would come from end users, although in some cases the work requests come from background tasks that are defined by the benchmark workloads running on the SUT. A client also communicates with the svdc-director to report data used in generating the final benchmark result.

Cluster: A cluster is a grouping of Hosts defined by the hypervisor management software.

Guest: A guest is a synonym for virtual machine.

Host: A host is a server that is used to host virtual machines.

Hypervisor (virtual machine monitor): Software (including firmware) that allows multiple VMs to be run simultaneously on a server.

Management Server: System and/or application used to manage the hosts, storage, and networking resources in a virtualized datacenter. Can be a physical server or a VM.

Server: The server represents a system – physical or virtual – that supports one or more hypervisor instances. The server consists of physical or virtual components such as the processors, memory, network adapters, storage adapters, etc. This definition includes nested virtualization.

SUT: The SUT, or System Under Test, is defined as the hosts and performance-critical components that execute the defined workloads. Among these components are storage, networking, management server, and all other components necessary to connect the hosts to each other and to the storage subsystem.

Testbed: The benchmark testbed consists of all of the components needed to produce a result. The SUT, client drivers and supporting infrastructure, and network components are all part of the testbed.

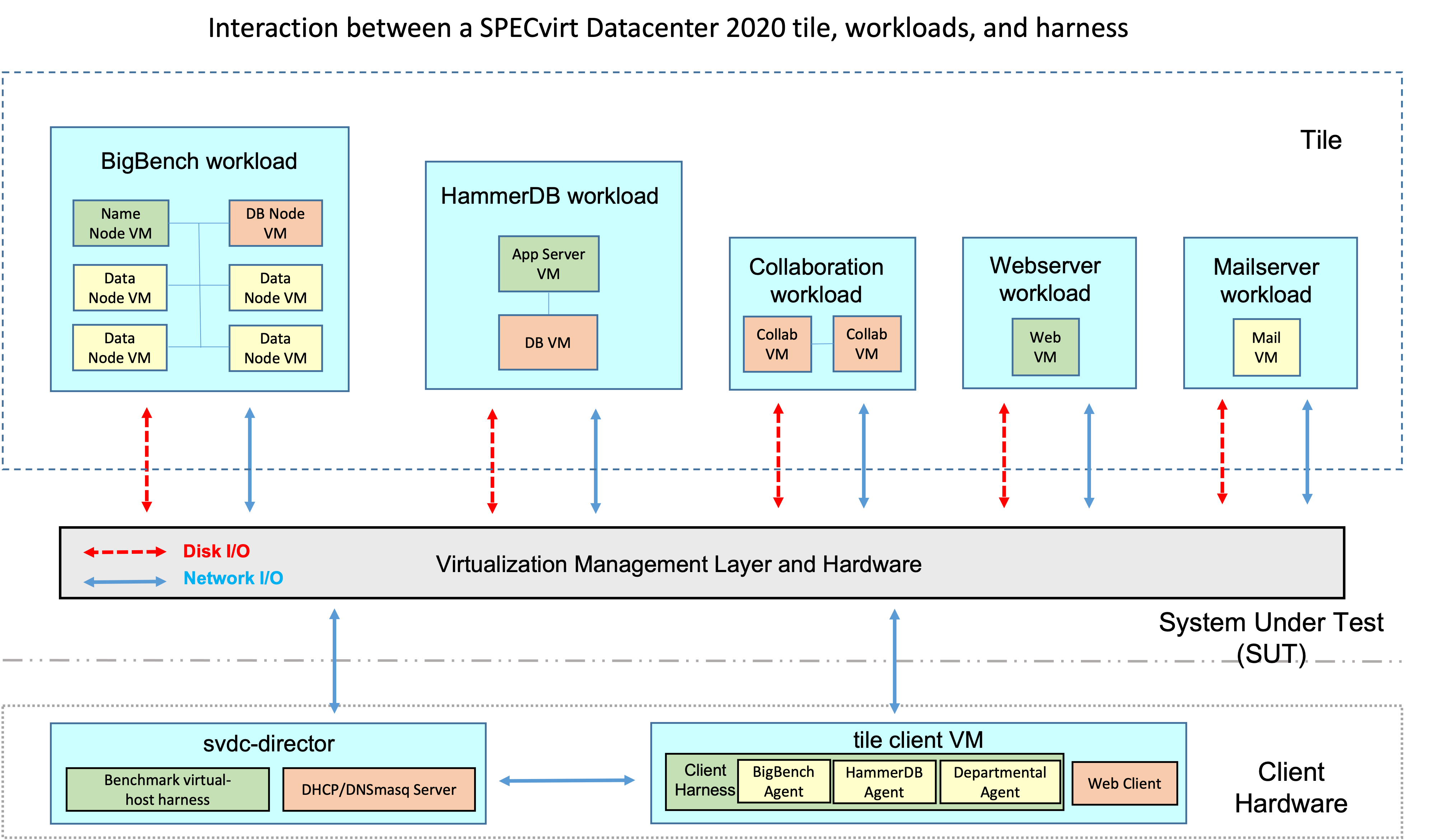

Tile: A single unit of work that is comprised of five workloads that are driven across twelve distinct virtual machines. The load on the SUT is scaled up by configuring additional sets of the 12 instances of VMs as described under Workload below and increasing the tile count for the benchmark.

Virtual Machine (VM): A virtual machine is an abstracted execution environment which presents an operating system (either discrete or virtualized). The abstracted execution environment presents the appearance of a dedicated computer with CPU, memory and I/O resources available to the operating system. In the SPECvirt Datacenter 2021 benchmark, a VM consists of a single OS and the application software stack that supports a single SPECvirt Datacenter 2021 component workload. There are several methods of implementing a VM, including physical partitions, logical partitions, containers, and software-managed virtual machines. For the SPECvirt Datacenter 2021 benchmark, a VM includes all associated VM disks (partitions, images, and virtual disk files) and the VM configuration files.

Workload: A workload is one or more related applications with a pre-defined initial dataset which runs on one or more VMs and consumes resources (CPU, memory, IO) to achieve an expected result. A workload may run on a single guest or multiple guests.

For the SPECvirt Datacenter 2021 benchmark, the workloads and their VMs are:

A departmental workload which simulates the stress of an IMAP mail server application environment based on the SPECvirt_sc2013 mailserver workload. A standalone Mailserver VM runs this workload.

A departmental workload which simulates the stress of a web server environment based on the SPECvirt_sc2013 webserver workload. A Webserver VM runs on the SUT and interacts with a remote process that runs on the client.

A departmental workload which simulates the stress of a pair of collaboration servers interacting with each other, modeled from real-world data. Two Collaboration Server VMs interact with each other to run this workload.

A database server workload based on a modified version of HammerDB that exercises an OLTP environment. The workload utilizes two VMs running on the SUT: a Database Server VM and an Application VM.

A big data workload based on a modified version of BigBench that utilizes an Apache Hadoop environment to execute complex database queries. The workload runs across six VMs on the SUT: a Name Node VM, a Database VM and four Data Node VMs.

For further definition or explanation of workload-specific terms, refer to the respective documents of the original benchmarks.
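As a quick cross-check of the tile definition, the following minimal sketch tallies the VMs listed for each workload and confirms that they add up to the twelve VMs of one tile. The dictionary keys and the function name are illustrative labels chosen for this example, not identifiers from the benchmark kit.

```python
# VM counts per workload within one tile, taken from the workload list above.
# Keys are illustrative labels, not names used by the benchmark kit.
TILE_VMS = {
    "mailserver": 1,     # standalone Mailserver VM
    "webserver": 1,      # Webserver VM (its peer process runs on the client)
    "collaboration": 2,  # two Collaboration Server VMs
    "hammerdb": 2,       # Database Server VM + Application VM
    "bigbench": 6,       # Name Node VM + Database VM + four Data Node VMs
}


def sut_vm_count(full_tiles: int) -> int:
    """Number of SUT workload VMs for a given number of full tiles."""
    if full_tiles < 0:
        raise ValueError("full_tiles must be non-negative")
    return full_tiles * sum(TILE_VMS.values())


if __name__ == "__main__":
    print(sum(TILE_VMS.values()))  # VMs in a single tile
    print(sut_vm_count(3))         # VMs for three full tiles
```

The sketch covers full tiles only; fractional tiles are described in section 4.2.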

4.0 Running the SPECvirt Datacenter 2021 Benchmark¶

4.1 Environment¶

The SPECvirt Datacenter 2021 benchmark environment encompasses all the components necessary to support and run the benchmark harness, the system under test (SUT), and the infrastructure connecting the testbed harness to the SUT.

4.1.1 Testbed Configuration¶

These requirements apply to all hardware and software components used in producing the benchmark result, including the SUT, network, and clients.

The testbed infrastructure must conform to the appropriate networking standards, and must utilize variations of these protocols to satisfy requests made during the benchmark.

Any deviations from the standard default configuration for testbed configuration components must be documented so an independent party would be able to reproduce the configuration and the result without further information. These deviations must meet general availability and support requirements (see SPEC Open Systems Group Policies and Procedures for details). The independent party should be able to achieve performance not lower than 95% of that originally reported.

Clients must reside outside of the SUT.

4.1.2 System Under Test (SUT)¶

VMs for each workload type must be configured identically. For example, a svdc-txxx-bbnn VM might be configured with 6 vCPUs and a svdc-txxx-happ VM might use 3 vCPUs, as long as all svdc-txxx-bbnn VMs use 6 vCPUs and all svdc-txxx-happ VMs use 3 vCPUs.

Note: The requirement for identical VM configuration excludes differences necessary for unique identification of the VM, for example hostname, MAC address, and IP address.
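The identical-configuration rule can be illustrated with a small check that groups VMs by workload type and compares their settings after dropping the identity fields. This is only a sketch: the dictionary layout, the field names (`type`, `hostname`, `mac_address`, `ip_address`, `vcpus`), and the function name are assumptions made for the example, not a format defined by the benchmark.

```python
# Fields that may legitimately differ between VMs of the same type.
# The field names are hypothetical; the rule itself names hostname,
# MAC address, and IP address as examples.
IDENTITY_FIELDS = {"hostname", "mac_address", "ip_address"}


def identically_configured(vms):
    """Return True if all VMs of each workload type share the same settings."""
    reference = {}  # first-seen settings per VM type
    for vm in vms:
        settings = {k: v for k, v in vm.items() if k not in IDENTITY_FIELDS}
        vm_type = settings["type"]
        # setdefault stores the first VM of a type and returns it afterwards.
        if reference.setdefault(vm_type, settings) != settings:
            return False
    return True


if __name__ == "__main__":
    vms = [
        {"type": "bbnn", "hostname": "svdc-t001-bbnn", "vcpus": 6},
        {"type": "bbnn", "hostname": "svdc-t002-bbnn", "vcpus": 6},
        {"type": "happ", "hostname": "svdc-t001-happ", "vcpus": 3},
    ]
    print(identically_configured(vms))
```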

For a run to be valid, the following attributes must hold true:

The SUT must contain multiples of four hosts (4, 8, 12, …). All hosts must have identical hardware components and be configured with the same software/firmware components and settings.

The SUT’s hardware and software components must be supported and generally available on or before date of publication, or will be generally available within 3 months of the first publication of these results.

No components that are included in the base configuration of the SUT, as delivered to customers, may be removed from the SUT unless recommended by the vendor for customer solutions. The intent of this restriction is to prohibit the use of configurations that would not be used in a real-world situation, i.e. “benchmark specials.”

The SUT returns the complete and appropriate byte streams for each request made.

The SUT must utilize shared storage for all VMs as described in SUT Storage.

The SUT must utilize stable and durable storage for all VMs as described in SUT Storage.
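Two of the attributes above, the host count and the requirement for identical hosts, lend themselves to a mechanical pre-check. The sketch below assumes each host is described by a flat mapping of settings; that representation and the function name are hypothetical, and the check does not cover the availability, byte-stream, or storage attributes.

```python
from typing import Mapping, Sequence


def valid_host_set(hosts: Sequence[Mapping[str, str]]) -> bool:
    """Check the host-count and identical-host attributes of a SUT.

    `hosts` holds one mapping of hardware/software/firmware settings per
    SUT host (a hypothetical representation chosen for this example).
    """
    # The SUT must contain a multiple of four hosts: 4, 8, 12, ...
    count_ok = len(hosts) >= 4 and len(hosts) % 4 == 0
    # All hosts must have the same components and settings.
    identical = all(h == hosts[0] for h in hosts)
    return count_ok and identical


if __name__ == "__main__":
    host = {"cpu": "model-x", "memory_gb": "512", "bios": "1.2"}
    print(valid_host_set([dict(host) for _ in range(4)]))
    print(valid_host_set([dict(host) for _ in range(6)]))
```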

Note: The submitter may be required to provide additional files from the SUT and/or workload VMs during the result review cycle. It is therefore highly recommended that the original SUT and harness from the submission remain available until the review has been completed.

4.1.2.1 Management Server¶

The management server used to manage the cluster containing the hosts running the workload VMs is considered part of the SUT. As such its configuration and HW must be disclosed. The following aspects must hold true for a valid result:

The management server may be either a physical system or a VM hosted on a hypervisor host.

If the management server is a hosted VM:

The HW and SW configuration of the VM’s host must be disclosed

The VM may reside on any of the hosts in the cluster used by the workload VMs

The VM’s virtual disk(s) may reside on the shared storage pool(s) used by the workload VMs

The VM may reside on a host other than the SUT workload hosts.

The VM may reside on a cluster or host used by the testbed’s client drivers. In this case, the HW and SW configuration of the client host running the management server VM must be documented in the corresponding MANAGER.xxx fields in the result report.

Note: While the management server is considered part of the SUT, this particular scenario is not deemed to violate the restriction that clients must reside outside of the SUT.

4.2 Workload VMs¶

To scale the benchmark workload, additional tiles are added. The last tile may be configured as a fractional tile.

If a final full tile cannot be run on the SUT, we allow running additional workloads – another mail, then another web, then another collaboration server, then another HammerDB – until SUT saturation. These additional workloads are called a fractional tile.
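The order in which workloads are added to a fractional tile can be sketched as a simple prefix of a fixed list. The labels and the function name below are illustrative assumptions; the sketch only encodes the ordering stated in the paragraph above and does not model how SUT saturation is determined.

```python
# Order in which additional workloads are added to a fractional tile,
# as stated above. Labels are illustrative, not kit identifiers.
FRACTIONAL_ORDER = ["mailserver", "webserver", "collaboration", "hammerdb"]


def fractional_tile(extra_workloads: int) -> list:
    """Workloads present in a fractional tile with the given workload count."""
    if not 0 <= extra_workloads <= len(FRACTIONAL_ORDER):
        raise ValueError("a fractional tile holds between 0 and 4 workloads")
    return FRACTIONAL_ORDER[:extra_workloads]


if __name__ == "__main__":
    print(fractional_tile(2))  # mail first, then web
```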

All workloads have fixed injection rates which cannot be altered for a valid run.

Each VM is required to be a distinct entity; for example, you cannot run the HammerDB application server and the HammerDB database on the same VM. The following block diagram shows the tile architecture and the virtual machine/hypervisor/driver relationships:

Figure 1. Single tile and harness block diagram

The SPECvirt Datacenter 2021 benchmark requires the use of the provided guest template images to create the client and SUT VMs.

For SPECvirt Datacenter 2021 benchmarks, all virtual machines are created using the template VM provided with the benchmark kit and deployed using the tools provided in the kit. No modifications within the template image OS or the deployed SUT VMs are allowed.

4.2.1 Departmental Workload VMs (Mailserver, Webserver, Collaboration Server)¶

For the SPECvirt Datacenter 2021 benchmark, a synthetic workload driver called AllInOneBench (AIO) is used for the departmental workloads. AIO is a Java application which can be configured to exert CPU, memory, IO, and network stress to emulate real world applications’ resource utilizations. Some departmental workloads are run using a single stand-alone VM instance, and some workloads are run using multiple driver instances on separate VMs which interact with each other to drive network stress.

The resource patterns selected for the three departmental workloads for the SPECvirt Datacenter 2021 benchmark were derived from measurements of the resource utilization of actual VMs running a pre-defined workload. The SPECvirt Datacenter 2021 departmental workload definitions are fixed and cannot be changed for a valid benchmark result.

The three SPECvirt Datacenter 2021 departmental workloads are:

Mailserver. A single virtual machine is used to represent the resource utilization patterns of an IMAP email server. A SPEC virt_sc 2013 mailserver VM under load was used to model this workload.

Webserver. A VM running on the SUT interacts with an application driver running on the tile’s client VM to mimic the utilization patterns of a webserver servicing client page requests. The CPU, memory, IO, and network utilization of a SPEC virt_sc 2013 webserver VM under load was used to model this workload.

Collaboration server. Two VMs run on the SUT and interact with each other to simulate a multi-user collaboration application. Resource patterns from actual customer collaboration servers were used to model this workload.

For SPECvirt Datacenter 2021 configurations, the provided template image is used to dynamically deploy the departmental workload VMs during the benchmark’s execution. No modification to the template OS is allowed.

4.2.2 HammerDB Workload VMs¶

HammerDB is a database load testing and benchmarking tool offering both an online transactional processing (OLTP) workload and an analytical processing (OLAP) workload. The SPECvirt Datacenter 2021 benchmark uses HammerDB’s OLTP workload to simulate multiple virtual users querying against and writing to a relational database. Examples of such workloads include an online grocery ordering and delivery system, online auction, or airline reservation system, all of which require performance and scalability.

The SPECvirt Datacenter 2021 benchmark adds functionality to split the workload into a multi-tier model for emulating networked customer scenarios. The HammerDB database resides on one VM, and the application server resides on another. The provided template is used to configure all of these VMs using scripts included in the template. No modification to the template OS is allowed.

4.2.3 BigBench Workload VMs¶

A modified version of the BigBench specification-based benchmark – which uses an Apache Hadoop environment to execute complex database queries – is used as one of the workloads for the SPECvirt Datacenter 2021 benchmark. It consists of six VMs running on the SUT: a database server, a name node, and four data nodes. The provided template is used to configure all of these VMs using scripts included in the template. No modification to the template OS is allowed.

4.3 Client and svdc-director VMs¶

The client drivers used for the SPECvirt Datacenter 2021 benchmark reside on VMs created using the benchmark template. There is one client driver VM per tile of workload on the SUT. The provided template is used to configure the client VMs using scripts included in the template. No modification to the client VM is allowed.

The client VMs are expected to communicate with the SUT via external physical network connections to ensure the desired network load is created. Therefore, none of the client VMs may reside on the same hypervisor cluster and/or hosts as the SUT workload VMs.

All of the client VMs used by the benchmark communicate to a director controller VM, svdc-director. The svdc-director is created using the SPECvirt Datacenter 2021 template VM. The svdc-director cannot reside on the same hypervisor cluster and/or hosts as the SUT workload VMs. Please see the SPECvirt Datacenter 2021 User Guide for instructions on setting up the svdc-director.

Scripts are provided to deploy the client VMs automatically when the workload VMs are deployed. However, client VMs can be deployed manually if desired. Also, client VMs are not restricted to the same requirements as the workload VMs with regards to host and CPU pinning, i.e. clients may be bound to specific hosts and CPU cores if desired.
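The placement rule for the client and svdc-director VMs amounts to requiring that none of them run on a SUT workload host. A minimal sketch of such a check follows; the host names, the VM name `client1`, and the function name are hypothetical, and the sketch checks hosts only, not cluster membership.

```python
def placement_violations(sut_hosts, harness_vm_hosts):
    """Return the harness VMs (clients, svdc-director) found on SUT hosts.

    sut_hosts: iterable of host names that run SUT workload VMs.
    harness_vm_hosts: mapping of harness VM name -> host it runs on.
    Both inputs are hypothetical representations for this example.
    """
    sut = set(sut_hosts)
    return sorted(vm for vm, host in harness_vm_hosts.items() if host in sut)


if __name__ == "__main__":
    sut_hosts = ["sut-host1", "sut-host2", "sut-host3", "sut-host4"]
    harness = {"svdc-director": "client-host1", "client1": "sut-host2"}
    print(placement_violations(sut_hosts, harness))
```

An empty list means that no client or svdc-director VM shares a host with the SUT workload VMs.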

4.4 Measurement¶

4.4.1 Quality of Service¶

The SPECvirt Datacenter 2021 individual workload metrics represent the aggregate throughput that a server can support while meeting targeted quality of service (QoS) values and specific validation requirements. In the benchmark run, one or more tiles are run simultaneously. The load generated is based on synthetic departmental workload operations, OLTP database transactions, and BigBench processing operations as defined in the SPECvirt Datacenter 2021 Design Overview.

The QoS requirements are relative to the individual workloads. These requirements are explained in the following sections.

4.4.1.1 Departmental Workloads¶

Each of the departmental workloads (mailserver, webserver, collaboration servers) completes a certain number of transactions during the course of the benchmark’s Measurement Interval (MI), based upon the workload’s pre-defined stress pattern. The time to complete a given transaction is that transaction’s response time.

To achieve a compliant benchmark result, for each departmental workload:

Ninety percent of the transaction response times must be less than or equal to the designated 90th_max response time for that workload (shown below) for every tile.

Ninety-five percent of the transaction response times must be less than or equal to the designated 95th_max response time for that workload (shown below) for every tile.

Table 1. Departmental workload maximum response times:

| Departmental workload | 90th_max (ms) | 95th_max (ms) |
|---|---|---|
| mailserver | 150 | 170 |
| webserver | 190 | 210 |
| collaboration server | 385 | 425 |

For more details on the departmental workloads, please refer to Sections 5.1, 5.2, and 5.3 in the SPECvirt Datacenter 2021 Design Overview.
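The two response-time criteria can be read as fraction checks over the transactions of one workload in one tile. The sketch below follows that literal reading using the limits from Table 1; it is not the harness's implementation, and the function name and input format (a list of response times in milliseconds) are assumptions.

```python
# (90th_max, 95th_max) in milliseconds, from Table 1.
LIMITS_MS = {
    "mailserver": (150, 170),
    "webserver": (190, 210),
    "collaboration server": (385, 425),
}


def meets_qos(workload, response_times_ms):
    """Check one workload of one tile against both response-time limits."""
    p90_max, p95_max = LIMITS_MS[workload]
    n = len(response_times_ms)
    if n == 0:
        return False  # assumption: no transactions cannot be compliant
    within_90 = sum(1 for t in response_times_ms if t <= p90_max)
    within_95 = sum(1 for t in response_times_ms if t <= p95_max)
    # Integer arithmetic avoids floating-point rounding at the boundary.
    return within_90 * 100 >= 90 * n and within_95 * 100 >= 95 * n


if __name__ == "__main__":
    times = [100] * 18 + [160, 200]  # 20 mailserver transactions
    print(meets_qos("mailserver", times))
```

Because the criteria apply to every tile, a full check would call this once per departmental workload per tile and require every call to pass.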

4.4.1.2 HammerDB Workload¶

HammerDB’s workload score is based upon the total number of New Order transactions completed during the benchmark’s MI. The rate of completion of these transactions is called the New Order Transactions Per Second (NO TPS).

To achieve a compliant benchmark result, the overall average NO TPS during the MI must be equal to or greater than the designated “NO TPS minimum” value of 200 for every tile.

For more details on the HammerDB workload and NO TPS threshold value, please refer to Section 5.4 in the SPECvirt Datacenter 2021 Design Overview.
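The NO TPS criterion is a simple rate check per tile. The following sketch assumes the harness reports a New Order transaction count per tile and that the MI length in seconds is known; the MI length is not stated in this section, so it is passed in as a parameter, and the function names are hypothetical.

```python
NO_TPS_MINIMUM = 200  # "NO TPS minimum" value from the rule above


def average_no_tps(new_orders: int, mi_seconds: float) -> float:
    """Overall average New Order transactions per second during the MI."""
    if mi_seconds <= 0:
        raise ValueError("mi_seconds must be positive")
    return new_orders / mi_seconds


def hammerdb_compliant(new_orders_per_tile, mi_seconds) -> bool:
    """True if every tile reaches the minimum average NO TPS."""
    return all(
        average_no_tps(count, mi_seconds) >= NO_TPS_MINIMUM
        for count in new_orders_per_tile.values()
    )


if __name__ == "__main__":
    # Illustrative numbers only: two tiles and an assumed 3600-second MI.
    print(hammerdb_compliant({1: 720_000, 2: 800_000}, 3600))
```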

4.4.1.3 BigBench Workload¶

The BigBench workload utilizes 11 different queries to stress the SUT. After completing these queries, BigBench cycles through the 11 queries again; this repeats until the workload is stopped or 6 cycles have been completed. BigBench’s workload score is based upon the total number of queries completed during the benchmark’s MI. The workload is stopped at the end of the MI.

To achieve a compliant benchmark result, each cycle of the 11 queries must finish within the designated “Max Cycle Time” of 4500 sec for every tile. Note that this requirement also applies to the final cycle, which may not be a complete query cycle.

For more details on the BigBench workload’s query set and the Max Cycle Time value, please refer to Section 5.5 in the SPECvirt Datacenter 2021 Design Overview.
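The cycle-time criterion can be sketched as a check over the elapsed time of each query cycle in each tile, with the final (possibly incomplete) cycle included. The input format, a mapping from tile number to a list of cycle durations in seconds, and the function name are assumptions for this example.

```python
MAX_CYCLE_TIME_SEC = 4500  # "Max Cycle Time" from the rule above


def bigbench_compliant(cycle_times_by_tile) -> bool:
    """True if every cycle in every tile stays within the Max Cycle Time.

    cycle_times_by_tile maps a tile number to the elapsed seconds of each
    query cycle, including the final, possibly partial, cycle.
    """
    return all(
        elapsed <= MAX_CYCLE_TIME_SEC
        for cycles in cycle_times_by_tile.values()
        for elapsed in cycles
    )


if __name__ == "__main__":
    # Illustrative durations only.
    print(bigbench_compliant({1: [4100, 4300, 1200], 2: [4450, 4500, 900]}))
```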

4.4.2 Benchmark Parameters¶

Workload-specific configuration files are supplied with the harness. For a run to be valid, all the parameters in the configuration files must be left at the prescribed values.

The SPECvirt Datacenter 2021 benchmark’s primary configuration file is Control.config. This file is modified to conform to the benchmarker’s environment. For a valid run, all of the parameters defined below the “Fixed Settings” line must be unaltered from their original values.

For more details on the different parameters within Control.config, please refer to Appendix A in the SPECvirt Datacenter 2021 User Guide.

4.4.3 Deploying and Running SPECvirt Datacenter 2021 VMs¶

For SPECvirt Datacenter 2021 runs, the benchmark harness must use the pre-supplied template to auto-deploy the workload VMs to the SUT using benchmark-supplied scripts. No changes to the applications or OS within the VMs generated by these scripts can be made.

The same pre-supplied template must be used to create the svdc-director and client driver VMs.

4.4.4 SUT Tuning¶

Tuning of the underlying SUT hardware and hypervisor software is allowed within the restrictions detailed below and elsewhere in these run and reporting rules. These tunings can include, but are not limited to:

Host OS tuning

Host HW tuning

SUT storage tuning

SUT network tuning

Host FW and/or BIOS tuning

Hypervisor tuning

Guest resource allocation (within restrictions described in next section)

Restrictions:

Hypervisor settings and policies cannot explicitly bind VMs to specific cores/CPUs/hosts.

Hypervisor settings and policies cannot explicitly prohibit VM migration.

Hypervisor settings and policies are allowed which explicitly apply to specific SUT workload VMs, but must be applied only at the cluster or datacenter level within the hypervisor manager. Modifying settings of individual SUT VMs is not allowed.

Modifications to any SUT settings outside of the built-in harness tools during the benchmark measurement are not allowed. This includes changes to the hypervisor, SUT hosts and SUT VMs.

As cited in section 2.0 Philosophy, the optimizations implemented on the SUT are expected to be applicable for uses beyond enhancing the benchmark’s performance, i.e. no benchmark specials. It is understood that some optimizations which are legitimate may appear to violate the philosophy clause, and questions may arise during the result’s review. It is the submitter’s responsibility to provide sufficient justification or documentation for the use of such optimizations if questions are raised during the result’s review.

4.4.4.1 SUT Storage Requirements¶

The SUT must use common/shared storage for all workload VMs’ virtual disks. Restrictions and clarifications to shared storage requirements:

Each workload type – Mail, Web, Collaboration, BigBench, HammerDB – must utilize shared storage pool(s) for its VMs’ virtual disks.

If multiple storage pools are used for a single workload type, they may have different configurations (e.g. # disks, RAID configuration, etc.), but each pool must have the capacity to support the workload VMs’ virtual disks for 1/N tiles, where N is the number of storage pools used for that workload.

Multiple workload types may use the same shared storage pool(s).

Physical storage media may be local to or external to SUT hosts, but must be presented as a resource pool available to all SUT hosts.

The SUT must use stable and durable storage for all workload VMs’ virtual disks. The SUT hypervisors must reside on stable storage and be able to recover the virtual machines, and the VMs must also be able to recover their data sets without loss from multiple power failures (including cascading power failures), hypervisor and guest operating system failures, and hardware failures of components such as the CPU or storage adapter.

Examples of stable storage:

Media commit of data; i.e. the data has been successfully written to the disk media.

An immediate reply disk drive with battery-backed on-drive intermediate storage or an uninterruptible power supply (UPS).

Server commit of data with battery-backed intermediate storage and recovery software.

Cache commit with UPS.

Examples of non-stable storage:

An immediate reply disk drive without battery-backed on-drive intermediate storage or UPS.

Cache commit without UPS.

Server commit of data without battery-backed intermediate storage and recovery software.

Examples of durable storage:

RAID 1 - Mirroring and Duplexing

RAID 0+1 - Mirrored array whose segments are RAID 0 arrays

RAID 5 - Striped array with distributed parity across all disks (requires at least 3 drives).

RAID 10 (RAID 1+0) - Striped array whose segments are RAID 1 arrays

RAID 50 - Striped array whose segments are RAID 5 arrays

Examples of non-durable storage:

RAID 0 - striped disk array without fault tolerance

JBOD - just a bunch of independent disks with/without spanning

If a UPS is required by the SUT to meet the stable storage requirement, the benchmarker is not required to perform the test with a UPS in place. The benchmarker must state in the disclosure that a UPS is required. Supplying a model number for an appropriate UPS is encouraged but not required.

If a battery-backed component is used to meet the stable storage requirement, that battery must have sufficient power to maintain the data for at least 48 hours to allow any cached data to be committed to media and the system to be gracefully shut down. The system or component must also be able to detect a low battery condition and prevent the use of the caching feature of the component or provide for a graceful system shutdown.

Hypervisors are required to safely store all completed transactions of their virtualized workloads (including in the case of failure of the hypervisor’s own storage).

4.4.5 VM Resource Allocation for SPECvirt Datacenter 2021 Benchmark¶

The configuration of the SUT VMs is constrained by the following restrictions:

Memory allocation for VMs must not be changed from the default values used by the harness’ deployment scripts.

Only a single virtual network interface can be used by the SUT VMs.

The number of virtual CPUs for each VM type is defined by the associated parameter in Control.config.

Manual resource pinning or manual reallocation after a VM is deployed is prohibited.

Settings and rules used to control VM resource allocation for specific VM types or groups must be made at the cluster and/or datacenter level within the hypervisor manager’s control interface.

4.4.6 Modifications to the SPECvirt Datacenter 2021 Template¶

The SPECvirt Datacenter 2021 benchmark template is pre-configured to be able to deploy and configure VMs necessary for benchmark operation, including harness and SUT VMs. As such, the only modifications to the template permitted are to allow operation within the SUT environment, and for optimization within the constraints of the run and reporting rules.

Examples of prohibited template modifications are:

Modifications to guest OS within the template

Designating that VMs start on specific host(s)

Designating that VMs start with explicit NUMA pinning

Setting migration policies that prohibit automatic migration

Adding extra virtual disks or NICs.

Examples of allowed template modifications are:

Designating virtual network to be used

Designating virtual disk controller type to be used (e.g. virtIO, virtIO-SCSI)

Defining virtual CPU type to be used

Defining graphic controller type to be used

Defining Migration policies to be used, following restrictions described elsewhere in the run and reporting rules.

Note that the lists above are not comprehensive and any modifications made must be disclosed in the FDR and must adhere to the other sections of the run and reporting rules.

5.0 Reporting Results¶

5.1 Metrics And Reference Format¶

The primary performance metric for the SPECvirt Datacenter 2021 benchmark is SPECvirt® Datacenter-2021, which is derived from the set of compliant results reported by the workloads in the suite – mailserver, webserver, collaboration server, HammerDB, BigBench – for all tiles during the benchmark’s measurement interval.

The SPECvirt Datacenter 2021 benchmark’s Tile Score for each tile is a “supermetric” that is the weighted geometric mean of the normalized submetrics for each workload:

Tile Score = [mailserver^(0.11667)] * [webserver^(0.11667)] * [collabserver^(0.11667)] * [HammerDB^(0.30)] * [BigBench^(0.35)]

The Score per Host is the sum of all the <Tile Score> values divided by the number of hosts in the SUT:

Overall Tile Score = (tile score1) + (tile score2) + ... + (tile scoreN)

Score per Host = <Overall Tile Score> / <numHosts>

The metric is output in the format SPECvirt ® Datacenter-2021 <Score per Host> per host @ <numHosts> hosts. For example:

SPECvirt ® Datacenter-2021 = 2.046 per host @ 4 hosts
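The scoring arithmetic above can be sketched in shell with awk. This is a minimal illustration and not the harness's reporter: the function names tile_score and score_per_host are hypothetical, the submetric inputs are assumed to be already normalized, and the three-decimal rounding is inferred from the example metric.

```shell
#!/usr/bin/env bash

# Weighted geometric mean of the five normalized workload submetrics.
# Argument order: mailserver webserver collabserver HammerDB BigBench
tile_score() {
    awk -v m="$1" -v w="$2" -v c="$3" -v h="$4" -v b="$5" 'BEGIN {
        # Exponents are the weights from the Tile Score formula.
        # x^w is computed as exp(w * log(x)).
        s = exp(0.11667*log(m) + 0.11667*log(w) + 0.11667*log(c) \
              + 0.30*log(h) + 0.35*log(b))
        printf "%.3f\n", s
    }'
}

# Sum of all tile scores divided by the number of SUT hosts.
# Usage: score_per_host <numHosts> <tile score 1> [<tile score 2> ...]
score_per_host() {
    local hosts="$1"; shift
    printf '%s\n' "$@" |
        awk -v n="$hosts" '{ total += $1 } END { printf "%.3f\n", total / n }'
}
```

For instance, four tiles that each score 2.046 on a four-host SUT give `score_per_host 4 2.046 2.046 2.046 2.046`, which prints 2.046 and matches the per-host figure in the example metric.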

Please consult the SPEC Fair Use Rule on the treatment of estimates.

The full disclosure report of results for the SPECvirt Datacenter 2021 benchmark is generated in HTML format by the provided SPEC tools. These tools may not be changed without prior SPEC approval. The tools perform error checking and flag some error conditions as resulting in an invalid run. However, these automatic checks are only there for debugging convenience, and do not relieve the benchmarker of the responsibility to check the results and follow the run and reporting rules.

The primary result file is generated with a .raw extension. This file contains details about the benchmark, including the raw data from the actual test measurement. The section of the .raw file that contains the raw data must not be altered. Corrections to the SUT descriptions may be made as needed to produce a properly documented disclosure.

SPEC reviews and accepts for publication on SPEC’s website only a complete and compliant set of results run and reported according to these rules. Full disclosure reports (FDRs) of all test and configuration details as described in these run and reporting rules must be made available. Licensees are encouraged to submit results to SPEC for publication.

5.2 Testbed Configuration Disclosure¶

All system configuration information required to duplicate a performance result submitted for publication must be reported. Tunings applied which are not in the default configuration for software and hardware settings must be reported. All SUT hosts and workload tiles must be tuned identically.

The file used to populate the benchmark’s result FDR with the configuration details of the testbed (SUT, benchmark svdc-director, and client drivers) is called Testbed.config and is located in /export/home/cp/bin on the svdc-director. Edit this file to report the required information described in the sections that follow.

5.2.1 SUT Hardware¶

The following SUT hardware components must be reported:

Vendor’s name (host node and shared storage)

System model name (host node and shared storage)

System firmware version(s), e.g. BIOS (host node)

Host node processor model, clock rate, number of processors (#cores, #chips, #cores/chip, on-chip threading enabled/disabled)

Host node main memory size and memory configuration if this is an end-user option which may affect performance, e.g. DIMM type, interleaving, and access time

Other hardware, e.g. server and/or storage enclosures, interconnect modules, write caches, other accelerators (host node, shared storage, networking)

Network switches and adapters

Number, type, model, and capacity of disk controllers and drives

Shared storage interconnect type, e.g. fibre channel, iSCSI, FCoE

Type of file system used for host and shared storage volumes

Hypervisor Manager host information, e.g. run as an appliance, host vendor name, system model, memory, CPU, other hardware.

5.2.2 SUT Software¶

The following SUT software components must be reported:

Host virtualization software (hypervisor) and all hypervisor-level tunings

Hypervisor manager software and all non-default tunings

Virtual machine details (number of virtual processors, memory, network adapters, disks, etc.)

Other clarifying information as required to reproduce benchmark results (e.g. list of active daemons, BIOS parameters, disk configuration, non-default kernel parameters, etc.), and logging mode, must be stated in the notes section.

5.2.3 Network Configuration¶

A brief description of the network infrastructure and configuration used to achieve the benchmark results is required. The minimum information to be supplied is:

Number, type, and model of host network controllers

Number, type, and role of networks used

Base speed of each network

Number, type, model, and relationship of external network components to support SUT (routers, switches, etc.)

Hypervisor management network configuration

VM network configuration

Storage network configuration, if applicable

The Network Configuration Notes section of the FDR should be used to list the following additional information:

Topology of harness and SUT networking, including:

Interconnect between client drivers and SUT networks, i.e. how clients are connected to SUT. For example: In each client host, 1 x 1GbE NIC port is connected to 1 x “Superfast 48-port” switch used for hypervisor management, and 1 x 25GbE NIC port is connected to a “Megafast 250xx 25GbE 24-port” switch used for the SUT workload.

Interconnect between SUT hosts and SUT networks. For example: In each host, 1 x 1GbE NIC port is connected to 1 x “Superfast 48-port” switch used for hypervisor management, 1 x 25GbE NIC port is connected to a “Megafast 250xx 25GbE 24-port” switch used for the SUT workload, and 1 x 25GbE NIC port is connected to 1 x “Megafast 252xx 25GbE 24-port” switch used for SUT VM migration.

Description of any vLAN configuration utilized for SUT networking

Description of all virtual network configuration used by SUT including port mapping, network roles, and network tuning.

5.2.4 Clients¶

The following client driver host hardware components must be reported:

Number of physical client systems used as hosts for all load drivers and the svdc-director

Operating System and/or Hypervisor and Version

System model number(s), processor type and clock rate, number of processors

Main memory size

Network Controller(s)

Other performance critical Hardware

Other performance critical Software

In the Client Notes section, describe the relationship between the client hosts and client VMs:

Number of vCPUs used for each client VM

Use of host and/or CPU pinning for client VMs.

If pinning is used, mapping of client VMs to specific hosts.

Location of svdc-director VM

5.2.5 General Availability Dates¶

The dates of general customer availability (month and year) must be listed for the major components: hardware and software (hypervisor, operating systems, and applications). All the system, hardware and software features are required to be generally available on or before the date of publication, or within 3 months of the date of publication (except where precluded by these rules). With multiple components having different availability dates, the latest availability date must be listed.

Products are considered generally available if they are orderable by ordinary customers and ship within a reasonable time frame. This time frame is a function of the product size and classification, and common practice. The availability of support and documentation for the products must coincide with the release of the products.

Hardware products that are still supported by their original or primary vendor may be used if their original general availability date was within the last five years. The five-year limit is waived for hardware used in clients.

For ease and cost of benchmarking, storage and networking hardware external to the server such as disks, storage enclosures, storage controllers and network switches, which were generally available within the last five years but are no longer available from the original vendor, may be used. If such (possibly unsupported) hardware is used, then the test sponsor represents that the performance measured is no better than 105% of the performance on hardware available as of the date of publication. The product(s) and their end-of-support date(s) must be noted in the disclosure. If it is later determined that the performance using available hardware is lower than 95% of that reported, the result shall be marked non-compliant (NC).

In the disclosure, the benchmarker must identify any component that is no longer orderable by ordinary customers.

If pre-release hardware or software is tested, then the test sponsor represents that the performance measured is generally representative of the performance to be expected on the same configuration of the release system. If it is later determined that the performance using available hardware or software is lower than 95% of that reported, the result shall be marked non-compliant (NC).
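The 95% threshold used in this section is simple arithmetic, sketched below for illustration. The function name within_95_percent is hypothetical and is not part of the benchmark kit; the check only mirrors the rule that a later measurement lower than 95% of the reported score leads to a non-compliant marking.

```shell
#!/usr/bin/env bash

# Succeeds (exit status 0) when the later measurement is at least 95% of the
# reported score, and fails (exit status 1) when it is lower than 95%.
# Usage: within_95_percent <reported score> <later measured score>
within_95_percent() {
    awk -v reported="$1" -v later="$2" \
        'BEGIN { exit !(later >= 0.95 * reported) }'
}
```

With a reported score of 2.046 the threshold is 0.95 x 2.046 = 1.9437, so a later measurement of 1.95 passes the check while 1.90 does not.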

5.2.6 Test Sponsor¶

The reporting page must list the date the test was performed (month and year), the organization which performed the test and is reporting the results, and the SPEC license number of that organization.

5.2.7 Notes¶

This section is used to document performance-relevant configuration and tuning information needed to reproduce the benchmark result that is not provided in the other sections of the result disclosure.

Examples of the type of information that should be noted:

System tuning parameters other than default

Process/daemon tuning parameters other than default

MTU size of the SUT network used

Background load, if any, on hosts

Critical customer-identifiable firmware or option versions such as network and disk controllers

Additional important information required to reproduce the results, which does not fit in the space allocated for fixed report fields, must be listed here.

If the configuration is large and complex, added information must be supplied either by a separate drawing of the configuration or by a detailed written description which is adequate to describe the system to a person who did not originally configure it.

Part numbers or sufficient information that would allow the end user to order the SUT configuration if desired.

The Notes section is subdivided into sections for HW, SW, Client Driver, and miscellaneous notes. The HW and SW sections are further divided into sections for notes on SUT hosts (HW, SW), Management server (HW, SW), storage (HW), and network (HW).

At the top of the Notes is a section used for global notes which apply to the result in general and need to be prominently displayed. An example of this type of note is the security vulnerability mitigation statements which need to be reported on OSG benchmarks (see: Security Mitigations and SPEC benchmarks).

The layout of the notes section, along with the corresponding fields in Testbed.config, is as follows:

- Notes: NOTES[]
- Hardware Notes:
  - Compute Node: HOST.HW.NOTES[]
  - Storage: STORAGE.NOTES[]
  - Network: NETWORK.NOTES[]
  - Management Node: MANAGER.HW.NOTES[]
- Software Notes:
  - Compute Node: HOST.SW.NOTES[]
  - Management Node: MANAGER.SW.NOTES[]
- Client Driver Notes: CLIENT.NOTES[]
- Other Notes: OTHER.NOTES[]

6.0 Submission Requirements for SPECvirt Datacenter 2021 Results¶

Once you have a compliant run and wish to submit it to SPEC for review, you need to provide the following:

The reporter-generated submission (.sub) file containing ALL the information outlined in Section 5.2

The output of configuration gathering script(s) that obtain the configuration information from the SUT, clients, and all workload virtual machines as described in sections 6.1, 6.2, and 6.3 below. The built-in harness tools collect the necessary supporting files – based on the selected $virtVendor – and create a single [run-dir]-support.tgz file, if the Control.config variable collectSupportFiles is set to “1”. Note: the value of collectSupportFiles must be set to “1” for a valid run.

Please email the SPECvirt Datacenter 2021 submissions to subvirt_datacenter2021@spec.org.

To publicly disclose SPECvirt Datacenter 2021 results, the submitter must adhere to these reporting rules in addition to having followed the run rules described in this document. The goal of the reporting rules is to ensure the SUT is sufficiently documented such that someone could reproduce the test and its results.

Compliant runs need to be submitted to SPEC for review and must be accepted prior to public disclosure. If public statements using the SPECvirt Datacenter 2021 benchmark are made they must follow the SPEC Fair Use Rules.

During a review of the result, the submitter may be required to provide, upon request, additional details of the testbed configuration that may not be captured in the above script to help document details relevant to questions that may arise during the review. It is therefore highly recommended that the testbed for the result remain available during the review.

6.1 SUT Configuration Collection¶

The $virtVendor script collectSupport.sh collects all of the necessary supporting information about the SUT hosts and hypervisor manager. The level of detail provided is of the same magnitude as that provided to the hypervisor vendor for issue support – e.g. vm-support tarball for VMware, SOS report for RHV – for each SUT host and the hypervisor manager.

The primary reason for this step is to ensure that there are not subtle differences that the vendor may miss in the result disclosure.

Note: SPEC understands that occasional issues may arise during the collection of the supporting tarball’s information which results in an absent or malformed supporting tarball. As such, it is permissible to re-run the $virtVendor/collectSupport.sh after the run to regenerate the supporting tarball with the following restrictions:

No modifications were made to any SUT host or to the hypervisor manager after the benchmark ran and prior to re-running the collectSupport.sh script.

All log files collected by the collectSupport.sh script from the SUT hosts and hypervisor manager must contain all messages generated during the entire benchmark’s operation for the result to be submitted.

The collectSupport.sh script may only be run for the most recent benchmark result run on the harness.

6.2 Client Configuration Collection¶

The client driver VMs should be generated from the benchmark template. Validation checks are performed during the benchmark to ensure that no changes have been made to the guest environment. As such, no additional supporting files are required. The client host(s) configuration should be documented in the Client Notes section of the benchmark result disclosure, but no supporting files from the host(s) are required.

6.3 SUT workload Guest Configuration Collection¶

The SUT workload VMs are generated from the benchmark template. Validation checks are performed during the benchmark to ensure that no unexpected changes have been made to the guest environment. As such, no additional supporting files are required.

6.4 Sub file syntax checker tool¶

When a submission (.sub) file is submitted to SPEC for review, it undergoes a preliminary verification scan to ensure the result file passes a minimum set of quality checks before it enters review. The benchmark template provides a tool which the user can run on a submission file to verify whether the result will pass these initial validation checks. The tool, check_sub_syntax.pl, is located in ${CP_HOME}/tools. To run the tool on a given .sub file, use the command:

${CP_HOME}/tools> ./check_sub_syntax.pl -s virt_datacenter2021.syntax <.sub file>

If there are no issues with the .sub file you should receive a “PASSES!” message. If issues are detected, you will receive messages indicating which field(s) in the .sub file encountered problems and the reason for the failure.

Note: Using this tool does not guarantee your submission will be accepted for publication. It is simply a way to detect common issues encountered in submissions before the result enters formal review.
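The check can also be wrapped for scripted use, for example before mailing a submission. The sketch below is an illustration only: the function name check_sub_file is hypothetical, and it assumes that the tool prints the literal text PASSES! on stdout or stderr when no issues are found. Because the function changes into ${CP_HOME}/tools first, the .sub file should be given as an absolute path.

```shell
#!/usr/bin/env bash

# Run the syntax checker on a .sub file and report success only if the
# captured output contains the PASSES! message.
# Usage: check_sub_file <absolute path to .sub file>
check_sub_file() {
    local sub_file="$1" output
    # Run from the tools directory, as in the command shown above.
    output="$(cd "${CP_HOME}/tools" &&
        ./check_sub_syntax.pl -s virt_datacenter2021.syntax "$sub_file" 2>&1)"
    # Always show the checker's messages so failing fields can be inspected.
    printf '%s\n' "$output"
    case "$output" in
        *'PASSES!'*) return 0 ;;
        *) return 1 ;;
    esac
}
```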

7.0 SPECvirt Datacenter 2021 Benchmark Kit¶

SPEC provides client driver software, which includes tools for running the benchmark and reporting its results. The client drivers are written in Java; precompiled class files are included with the kit, so no build step is necessary. Recompilation of the client driver software is not allowed, unless prior approval from SPEC is given.

This software implements various checks for conformance with these run and reporting rules; therefore, the SPEC software must be used as provided. Source code modifications are not allowed, unless prior approval from SPEC is given. Any such substitution must be reviewed and deemed performance-neutral by the OSSC.

The kit also includes source code for the file set generators, script code for the web server, and other necessary components.

Appendix A. Submitting a New SPECvirt Datacenter 2021 Hypervisor Toolset¶

The SPECvirt Datacenter 2021 benchmark allows for the use of toolsets other than the default two included on the template VM – RHV and vSphere – if necessary to support other hypervisor vendors or updated releases of hypervisor or hypervisor manager products. New toolsets must conform to the following rules:

The new toolset may only be on the svdc-director. The template(s) used for the SUT client VM deployment must not be altered.

Only changes or additions needed to support the new toolset may be made to the svdc-director’s guest environment. Any changes to the environment must be reviewed and accepted by the SPEC Virtualization subcommittee prior to first publication of results using the new toolset. This review may be done as part of the submission process for the first result using the new toolset.

New toolsets must conform to the following additional rules:

The new toolset cannot reside in the directory of a previously provided or approved toolset.

The new toolset must reside completely within a new single directory tree in ${CP_HOME}/config/workloads/specvirt/HV_Operations. The name of the toolset directory (relative to ${CP_HOME}/config/workloads/specvirt/HV_Operations, not the full path) is used as the value of virtVendor in Control.config. Subdirectories under the ${virtVendor} directory are allowed.

Primary bash scripts of the names described in Table A.1 below must be provided and accept the input parameters indicated in order to support the benchmark harness.

Input parameters for the primary scripts must be provided in the indicated order.

These scripts may invoke other scripts/commands which make the appropriate API calls to the Hypervisor manager. The supporting scripts do not have to use bash, but do need to be invoked from the primary bash script.

Sourcing the script ${CP_BIN}/getVars.sh makes all of the variables in ${CP_BIN}/Control.config available as environment variables to the primary control scripts. Please refer to the default toolsets for examples of how to use this supporting script.

The scripts DeployVM-AIO.sh, ExitMaintenanceMode.sh, and PowerOnVM.sh must redirect Standard output (stdout) and append it to ${CP_HOME}/log/${virtVendor}_scripts.log for benchmark validation purposes. Other ${virtVendor} scripts may redirect stdout to the same log file, but they are not required to do so.
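The last two rules can be illustrated with a skeleton for a primary script. This is a sketch under stated assumptions, not code from a default toolset: the function name power_on_vm_main and the log message are hypothetical, and the actual hypervisor manager call is left as a placeholder because it is vendor specific.

```shell
#!/usr/bin/env bash

# Hypothetical body of a primary script such as PowerOnVM.sh <VM name>.
power_on_vm_main() {
    local vm_name="$1"

    # Make the Control.config variables (including virtVendor) available.
    # shellcheck disable=SC1091
    source "${CP_BIN}/getVars.sh"

    # Append everything written to stdout from here on to the validation log.
    exec >> "${CP_HOME}/log/${virtVendor}_scripts.log"

    echo "$(date -u +%Y-%m-%dT%H:%M:%SZ) PowerOnVM.sh: powering on ${vm_name}"

    # Placeholder: invoke the vendor-specific helper that calls the
    # hypervisor manager API here. Supporting helpers need not be bash.
}
```

In a real script file the last line would be `power_on_vm_main "$@"`, so that the harness-supplied VM name is passed through. Using `exec >>` once at the top keeps every later command's stdout in the log without repeating the redirection.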

Table A.1. Primary Scripts and Input Parameters Required for all Toolsets¶

| Script Name | Input Parameters | Invoked By | Comments |
|---|---|---|---|
| AddVMDisk.sh | <disk size in GB> | common/AddDirectorDisk.sh | Adds secondary vdisk to svdc-director VM during initial creation |
| collectSupport.sh | [none] | common/endRun.sh | Collects files necessary for a submitted result’s supporting tarball from hosts and hypervisor manager. |
| DeleteVM.sh | <VM name> | bin/prepTestRun.sh, common/DeleteSUTTile.sh, common/DeployClientTile.sh, common/DeployClientTile-custom.sh, DeployClientTiles.sh, prepSUT.sh, DeployHammerDBTile.sh, DeployBBTile.sh, DeleteTile.sh | Deletes indicated VM |
| DeployVM-AIO.sh | <VM name> <MAC address> | bin/genConfig.sh | Deploys indicated departmental workload VM with indicated MAC. Script needs to handle <workload>StoragePool[] parameter(s) in Control.config to evenly distribute VMs across all defined storage pools for that workload, depending on tile number. |
| DeployVM-BB.sh | <tilenum> | common/DeployBBTile.sh | Deploys BigBench workload VMs for indicated tile. Script needs to handle BBstoragePool[] parameter(s) in Control.config to evenly distribute VMs across all defined storage pools for that workload, depending on tile number. |
| DeployVM-Client.sh | <tilenum> | common/DeployClientTiles.sh, common/DeployClientTile-custom.sh | Deploys client driver VM for indicated tile. Script needs to handle clientStoragePool[] parameter(s) in Control.config to evenly distribute VMs across all defined storage pools for that workload, depending on tile number. |
| DeployVM-ClientToHost.sh | <tilenum> <hostname> <storage pool> | common/DeployClientTile-custom.sh | Deploys client driver VM for indicated tile to specified host and storage pool |
| DeployVM-HDB.sh | <tilenum> | common/DeployHammerDBTile.sh | Deploys HammerDB workload VMs for indicated tile. Script needs to handle HDBstoragePool[] parameter(s) in Control.config to evenly distribute VMs across all defined storage pools for that workload, depending on tile number. |
| EnterMaintenanceMode.sh | <hostname> | bin/prepTestRun.sh, common/prepSUT.sh, common/prepSUT-AddTile.sh | Puts indicated host into maintenance mode |
| ExitMaintenanceMode.sh | <hostname> | bin/genConfig.sh | Brings indicated host out of maintenance mode |
| getDCInfo.sh | <client\|SUT> | bin/prepTestRun.sh | Gathers hypervisor manager information if needed for toolset scripts. If no information is needed, leave script empty |
| PowerOffAllTiles.sh | [none] | common/PowerOffAllTiles.sh | Used to shut down all VMs in SUT cluster which have a name beginning with svdc-t### |
| PowerOffVM.sh | <VM name> | bin/shutdownSUTTile.sh, common/DeployClientTile-custom.sh | Shuts down indicated VM |
| PowerOnVM.sh | <VM name> | bin/genConfig.sh, bin/prepTestRun.sh | Powers on indicated VM. |
| setupPasswordlessLogin.sh | [none] | common/setupPasswordlessLogin.sh | Used to set up passwordless ssh access to all SUT hosts, and optionally, client hosts. The list of hosts to configure should be enumerated in /etc/hosts, with the string “Host” in their names. This access may be necessary to collect data from the hosts for the supporting tarball. |
| sutConfig.sh | <0\|1> | ${virtVendor}/startRun.sh, ${virtVendor}/endRun.sh | Used to collect necessary hypervisor environment data for benchmark results. Data is saved in ${rundir}/config/<start/end>Run.config. See below for required format of <start/end>Run.config |
| userInit.sh | [none] | ${initScript} in Control.config | Sample user script to perform pre-benchmark monitoring tasks, such as starting performance monitoring scripts |
| userExit.sh | [none] | ${exitScript} in Control.config | Sample user script to perform post-benchmark monitoring tasks, such as collecting output of performance monitoring scripts |
| vmExist.sh | <VM name> | ${virtVendor}/DeployVM-AIO.sh | Used to verify the indicated VM exists. Should return “pass” if VM exists and “fail” otherwise |
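Several of the deployment scripts in Table A.1 must spread VMs evenly across the defined storage pools according to tile number. One simple way to meet that requirement is a round-robin selection, sketched below. The function name pick_storage_pool is hypothetical, tiles are assumed to be numbered from 1, and the pools are passed as arguments because the way getVars.sh exposes the Control.config pool lists is not described here.

```shell
#!/usr/bin/env bash

# Choose a storage pool for a tile by cycling through the defined pools.
# Usage: pick_storage_pool <tilenum> <pool> [<pool> ...]
pick_storage_pool() {
    local tilenum="$1"; shift
    local pools=("$@")

    # At least one pool is needed to make a choice.
    (( ${#pools[@]} > 0 )) || return 1

    # Tiles are assumed to start at 1. The 10# prefix forces base 10 so that
    # zero-padded tile numbers such as 008 are not parsed as octal.
    local index=$(( (10#$tilenum - 1) % ${#pools[@]} ))
    echo "${pools[$index]}"
}
```

With three pools, tiles 1, 2 and 3 land on the first, second and third pool, and tile 4 wraps around to the first pool again, so no pool ever holds more than one tile's worth of VMs above any other.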

A.1 Format of startRun.config and endRun.config Files¶

The files startRun.config and endRun.config are used to report details of and perform compliance checks on the SUT environment. These two files must be created by the sutConfig.sh script and/or supporting scripts it invokes. Table A.2 shows which fields need to be included and populated in startRun.config and endRun.config. Some fields are indexable, which allows for multiple entries of the field. For the SUT.VM.xxx indexable fields, all fields with the same index value must be associated with the same VM. Likewise, all SUT.HOST.xxx indexable fields with the same index value must be associated with the same SUT host.
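The index rule for indexable fields can be illustrated with a short sketch that writes the name and state of each host under one shared index. The function name emit_host_fields is hypothetical, and both the `field[index] = value` line syntax and the zero-based numbering are assumptions made for illustration; the exact syntax expected by the harness should be taken from the default toolsets.

```shell
#!/usr/bin/env bash

# Emit indexed host fields so that the name and state of one host always
# share the same index value.
# Input on stdin: one "<host name> <state>" pair per line.
emit_host_fields() {
    local i=0 name state
    while read -r name state; do
        # Skip blank lines rather than emitting empty entries.
        [ -n "$name" ] || continue
        printf 'SUT.Host.name[%d] = %s\n' "$i" "$name"
        printf 'SUT.Host.state[%d] = %s\n' "$i" "$state"
        i=$((i + 1))
    done
}
```

Writing both fields inside the same loop iteration is what keeps the indices aligned; collecting names and states in two separate passes risks pairing a state with the wrong host if the ordering differs between queries.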

Table A.2 Required Fields for startRun.config and endRun.config Files¶

| Field Name | Indexable | In startRun.config | In endRun.config | Comments |
|---|---|---|---|---|
| SUT.Host.name[] | Yes | Yes | Yes | Name of host in SUT |
| SUT.Host.state[] | Yes | Yes | Yes | State of SUT host, e.g. Up, Maintenance, Down, etc. |
| SUT.Host.offline.Total | No | Yes | Yes | Number of hosts in SUT cluster in maintenance mode. This value in startRun.config is compared to the number of offline hosts defined in Control.config for validation. |
| SUT.Host.online.Total | No | Yes | Yes | Number of hosts in SUT cluster that are online. |
| SUT.Storage.Pool[] | Yes | Yes | Yes | Name of storage pool that is available to SUT hosts. All storage pools defined as workload storage pools in Control.config must be in list |
| SUT.Cluster.name[] | Yes | Yes | Yes | Name of cluster in datacenter used by SUT. Cluster defined as SUT cluster in Control.config must be in list |
| SUT.Datacenter.name[] | Yes | Yes | Yes | Name of datacenter used by SUT cluster |
| SUT.VM.name[] | Yes | Yes | Yes | Name of VM in SUT cluster |
| SUT.VM.state[] | Yes | Yes | Yes | Operational state of VM in SUT cluster |
| SUT.VM.vCPU[] | Yes | Yes | Yes | Number of vCPUs defined for VM in SUT cluster. Value is checked for SUT workload VMs to verify it matches the defined value in Control.config |
| SUT.VM.memoryMB[] | Yes | Yes | Yes | Amount of memory defined for VM in SUT cluster |
| SUT.VM.running.total | No | Yes | Yes | Total number of VMs running on SUT cluster |
| SUT.VM.down.total | No | Yes | Yes | Total number of VMs powered down on SUT cluster |
| Benchmark.VM.running.total | No | Yes | Yes | Total number of VMs running on SUT cluster which match a workload VM name. Value in startRun.config must be 0. Value in endRun.config must match number of VMs used by $numTilesPhase3 defined in Control.config |
| Benchmark.VM.down.total | No | Yes | Yes | Total number of VMs powered down on SUT cluster which match a workload VM name. |
| SUT.VM.migration.total | No | No | Yes | Number of VM migrations which occurred during benchmark measurement interval. |
| Benchmark.test.start | No | Yes | No | Start of benchmark execution. Format must be valid date/time string output, but is otherwise open. For example both “-u %y-%m-%dT%T” and “%c” are acceptable. |
| Benchmark.test.end | No | Yes | No | End of benchmark execution. Format must be valid date/time string output, but is otherwise open. For example both “-u %y-%m-%dT%T” and “%c” are acceptable. |

Note:

Additional SUT configuration data may be collected by sutConfig.sh – for example to provide reference information to use when filling out the benchmark result’s FDR – but this additional data should not be placed into startRun.config or endRun.config or any other benchmark harness files.
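The two date/time formats cited as acceptable for Benchmark.test.start and Benchmark.test.end correspond to arguments of the standard date command. The sketch below shows how each could be produced; the function names are hypothetical.

```shell
#!/usr/bin/env bash

# Timestamp in the "-u %y-%m-%dT%T" form: UTC, two-digit year,
# e.g. 21-06-30T14:05:09.
benchmark_timestamp() {
    date -u +%y-%m-%dT%T
}

# Alternative in the "%c" form: the locale's preferred date and time
# representation, so the exact layout depends on the system locale.
benchmark_timestamp_locale() {
    date +%c
}
```

The UTC form is easier to compare across hosts in different time zones, while the %c form is easier to read; the choice between them is left open by Table A.2.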

A.2 Procedure for Submitting a New Toolset¶

A new toolset must be validated and approved by the SPEC virtualization committee before it can be used for benchmark publications. To accomplish this, a compliant SPECvirt Datacenter 2021 result which uses the new toolset must be submitted to the committee. The result may be a new benchmark result for publication, or solely intended to validate the toolset; if the latter is intended, a single tile result is sufficient. The result goes through a normal review cycle with special attention paid to the toolset. The result must be accepted by the committee at the end of the review but does not need to be published.

As part of the submission a manifest file needs to be provided which includes a list of all of the files in the toolset along with their MD5SUM values. This manifest file is used for future submissions utilizing the new toolset to ensure the toolset has not been altered. If alterations to the directory need to be made to support the toolset, instructions need to be included within the toolset detailing the steps to configure the director.
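A manifest of this kind can be produced with standard tools. The sketch below is one possible approach and not a SPEC-mandated format: the function names are hypothetical, the layout is simply what md5sum prints (checksum followed by a path relative to the toolset directory), and it assumes GNU coreutils and findutils are available on the svdc-director.

```shell
#!/usr/bin/env bash

# Print "<md5>  <relative path>" for every file in the toolset directory,
# in a stable sorted order.
# Usage: make_toolset_manifest <toolset dir> > manifest.md5
make_toolset_manifest() {
    local toolset_dir="$1"
    ( cd "$toolset_dir" &&
        find . -type f -print0 | sort -z | xargs -0 -r md5sum )
}

# Re-check a toolset directory against a previously generated manifest.
# Exit status is non-zero if any listed file is missing or has changed.
# Usage: verify_toolset_manifest <toolset dir> <absolute path to manifest>
verify_toolset_manifest() {
    local toolset_dir="$1" manifest="$2"
    ( cd "$toolset_dir" && md5sum --quiet -c "$manifest" )
}
```

Writing the manifest outside the toolset directory avoids listing the manifest inside itself. Note that `md5sum -c` only checks the files named in the manifest, so it detects altered or missing files but not extra files added later.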

After the new toolset has been approved, it is posted to https://www.spec.org/virt_datacenter2021/docs/sdk.html to be used by other SPECvirt Datacenter 2021 users. It is expected that the new toolset is accessible to all benchmark license holders who may wish to utilize it. If there is concern about posting the toolset to SPEC’s website, instructions need to be provided on how interested parties can obtain the new toolset. These instructions are to be posted on the SDK page at SPEC in lieu of the actual toolset tarball.

A.2.1 Considerations When Submitting a New Toolset¶

New toolsets are presumed to be complete and final for the hypervisor manager version(s) they were designed for. If future need arises to update, correct, or amend a toolset, a new toolset package needs to be submitted to SPEC following the procedure described above. The updated toolset needs to use a new ${virtVendor} directory name to distinguish the toolsets. It is understood that for some scripts it is useful to have consistent variable definitions of toolset directories, so it is permissible to use environment variables which are named the same between toolset versions to define the toolset path for the scripts to utilize. However, the ${virtVendor} variable value must be unique.

Appendix B. External Workload References¶

The SPECvirt Datacenter 2021 benchmark uses modified versions of BigBench and HammerDB for its virtualized workloads. For reference, the documentation for those benchmarks is listed below:

HammerDB: HammerDB Documentation

BigBench: BigBench README.md

Note:

Not all of the restrictions or run rules described in the documentation referenced above are applicable to SPECvirt Datacenter 2021 results, but when a compliance issue is raised, SPEC reserves the right to refer back to these individual benchmarks’ run rules as needed for clarification.

SPEC and the names SPECvirt, SPEC virt_sc, and SPEC virt_sc2013 are trademarks of the Standard Performance Evaluation Corporation.

Additional product and service names mentioned herein may be the trademarks of their respective owners.

Copyright 2021 Standard Performance Evaluation Corporation (SPEC).

All rights reserved.